The afternoon session of the AI for Math workshop featured two invited talks, a panel discussion, a poster session, the workshop’s best paper award, and presentations from challenge winners.

PutnamBench: A Multilingual Competition-Mathematics Benchmark for Formal Theorem-Proving

The workshop’s best paper award was given to PutnamBench, which was presented by George Tsoukalas from UT Austin.

Tsoukalas gave a brief overview of existing benchmarks, and introduced PutnamBench as a “next-gen olympiad-style benchmark”. PutnamBench has several nice properties:

- it is multilingual, with every problem provided in Isabelle, Lean, and Coq as well as natural language

- it has relatively uniform difficulty

- it’s hard!

The problems in PutnamBench are sourced from the William Lowell Putnam Mathematical Competition for undergraduate students in the US and Canada, which has run annually since 1938. Previous Putnam Fellows (top 5 finishers) include Richard Feynman and three Fields medalists.

For more on PutnamBench, see the paper website, which includes a leaderboard listing various methods’ performance.

Progress or Regress? Self-Improvement Reversal in Post-training

The honorable mention award was given to “Progress or Regress? Self-Improvement Reversal in Post-training” and presented virtually by Ting Wu from Fudan University.

The paper introduces the concept of “Self-improvement Reversal” which the authors define as a phenomenon that occurs during iterative post-training, when improvements in pass@1 accuracy come along with a decline in broader capabilities like output diversity and out-of-distribution generalisation.

For more information, see the paper website.

Yilun Zhou: How and Where Do LLMs Fail on Math? A Multi-Faceted Evaluation on LLM Math Reasoning Skills

Yilun Zhou gave a talk on evaluating LLMs’ mathematical reasoning skills.

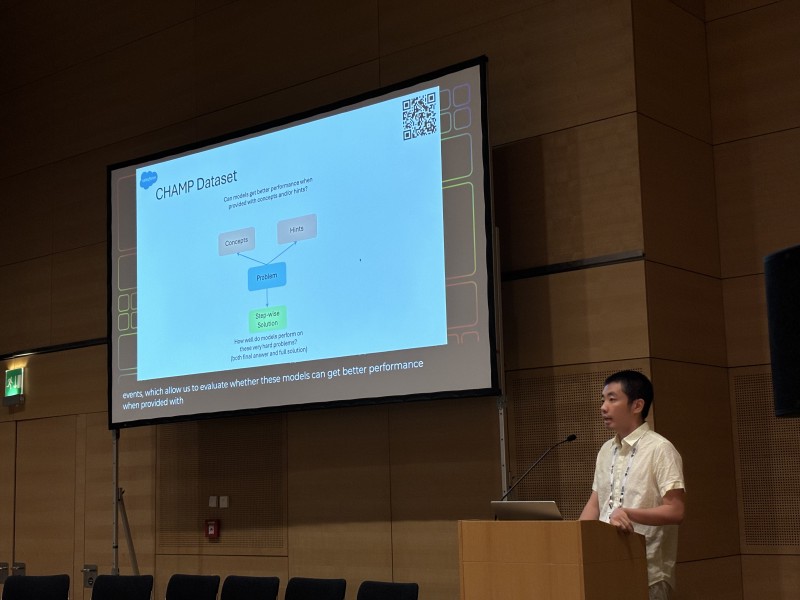

LLMs are getting better and their math benchmark performances are saturating — perhaps not surprising given the limited difficulty of the problems presented by many of them. Zhou walked through two examples in the MATH benchmark dataset, and introduced the CHAMP dataset created by him and co-authors Yujun Mao and Yoon Kim.

The CHAMP dataset contains “challenging high school competition-level math problems”, alongside hints for each problem and key concepts relevant to each problem — allowing for models to be evaluated on how well they are able to incorporate additional relevant information into their solutions.

More info on CHAMP, including a helpful dataset explorer tool, can be found on the CHAMP website.

Anima Anandkumar

Anima Anandkumar gave a brief remote talk covering her and collaborators’ work on tools including LeanDojo, which provides an LLM assistant in a Copilot model to aid in formal proof search. The Lean Copilot can be integrated in a user’s IDE, run locally or on the cloud, and provide suggested tactics/premises at any proof step.

A detailed diagram titled “Overview of LeanDojo” showing the interaction between Lean, a machine learning model, the LeanDojo Benchmark, and many other elements.](https://mlcontests.com/icml-2024/ai-for-math-workshop-afternoon/images/icml-2024-ai-for-math-leandojo_hu9201573095601383994.jpg)

One key difference between Lean Copilot and LLM-based programming assistants is that suggestions in Lean Copilot have already been vetted for correctness — a benefit of the formal and stepwise nature of Lean in comparison with programming in languages like Python.

For more information, see the LeanDojo website.

Panel Discussion

The workshop ended with a panel discussion followed by a poster session.

The organisers gathered answers to seven questions from the speakers before the workshop — on topics including the relative promise of different research paths and expected timelines for various milestones.

One question asked about the expected timeline for an AI system to obtain an IMO gold medal. After yesterday’s announcement from Google DeepMind showing a system that had narrowly missed out on a gold medal, almost all panelists agreed that this would be achieved at the next IMO, in 2025. The one holdout expected 2026, and cited the difficulty of formalising the combinatorics problems.

Panelists’ differences in opinion tended to be slight, and more due to nuances between “in principle” and “in practice” considerations. For example, most panelists seemed to believe that text-only models were sufficient (in principle) for achieving superhuman mathematical reasoning performance, but that in practice multi-modal models might allow us to get there more quickly.

The panel was followed by a brief poster session, cut short by our eviction as the venue closed.

Throughout the workshop, it was clear that AI for Mathematics is a field where significant progress is being made, and will continue to be made at a rapid pace in the near future.

This was the first AI for Math workshop at ICML, and hopefully the first of many!

This post gave an overview of the afternoon session. See the earlier post for the morning session. Another post will follow, describing the workshop challenges and winning solutions.

For more details on the speakers, challenges, and awards, visit the workshop website.