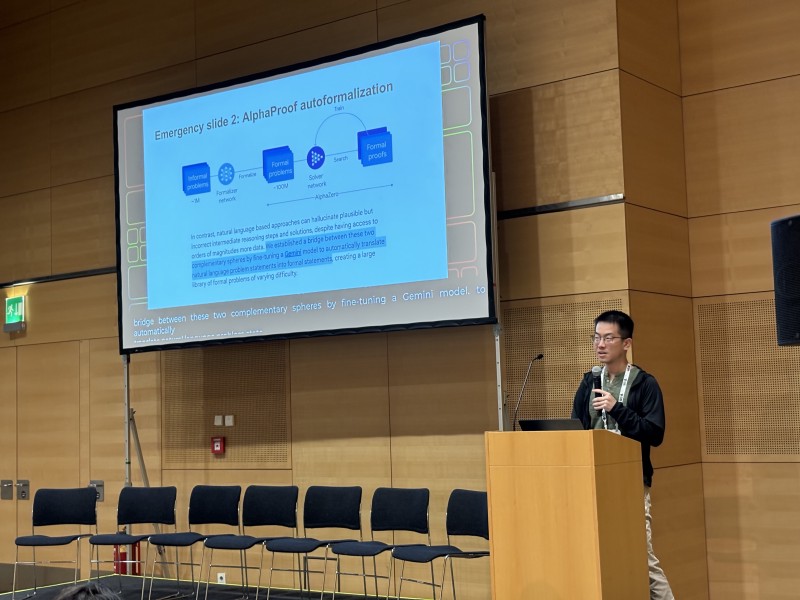

The ICML 2024 AI for Math(s) workshop (ICML page / workshop website) was auspiciously timed, starting the morning after Google DeepMind announced their IMO silver-medal-level systems, AlphaProof and AlphaGeometry 2 (Google DeepMind blog).

There were many great talks and posters in this workshop. This post covers the talks up to the lunch break, and will be followed by posts describing the competitions and afternoon talks.

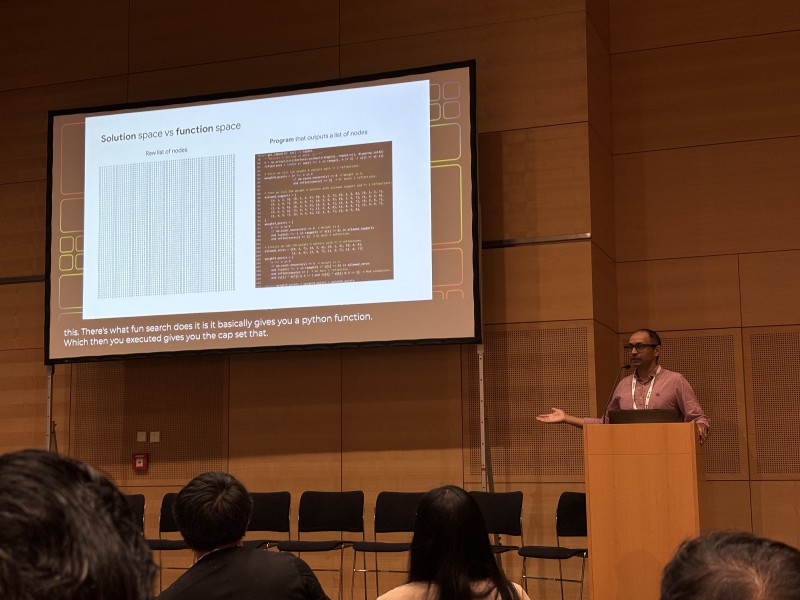

M. Pawan Kumar: Mathematical Discoveries from Program Search with Large Language Models

M. Pawan Kumar presented the FunSearch system, which has discovered novel results to difficult problems by searching in function space — through manipulating Python programs — as opposed to searching in solution space directly. An LLM is used to guide the evolutionary search process (generating candidate programs). Interestingly, using a better LLM didn’t tend to improve performance — possibly related to the candidate programs being short, and the prompts being simple.

Kumar described the requirements for this approach to be problems where we can:

- verify the correctness of solutions, and

- measure how good a solution is.

Both the cap set problem and the online bin packing problem meet these criteria (the former in a global sense, the latter conditional on a given distribution or dataset), and FunSearch was able to improve on existing results for these problems.

For more on FunSearch, see the Google DeepMind blog post describing the system.

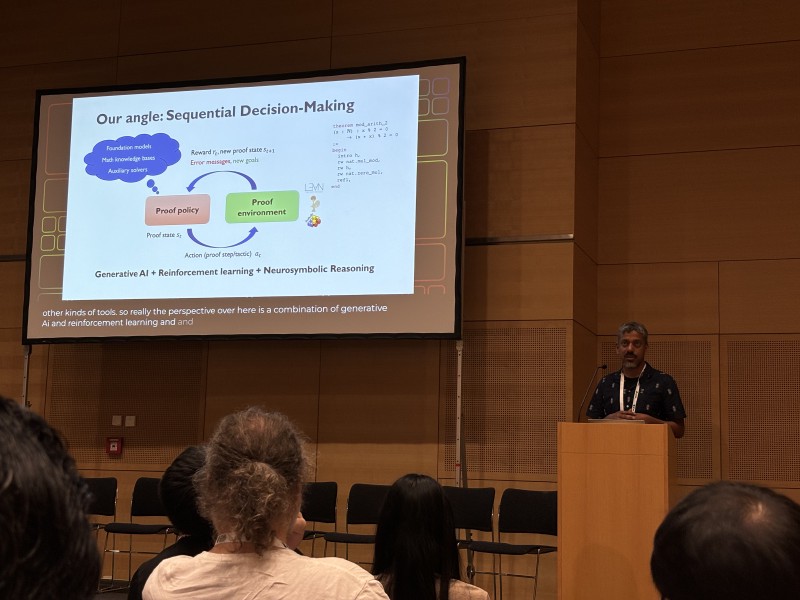

Swarat Chaudhuri: Language Model Agents for Formal Theorem-Proving

Swarat Chaudhuri’s talk introduced some recent trends in LLM agents for formal theorem-proving, and described his lab’s approach which uses reinforcement learning aided by generative models and neuro-symbolic reasoning.

He gave an overview of his lab’s COPRA agent (arxiv), which combines GPT-4 generation with augmentation, backtracking, and a lemma database. He also gave a preview of an upcoming paper which makes use of cross-language learning (Coq/Lean/Isabelle) to increase dataset diversity (e.g. induction proofs are more common in Coq software verification proofs than in Lean).

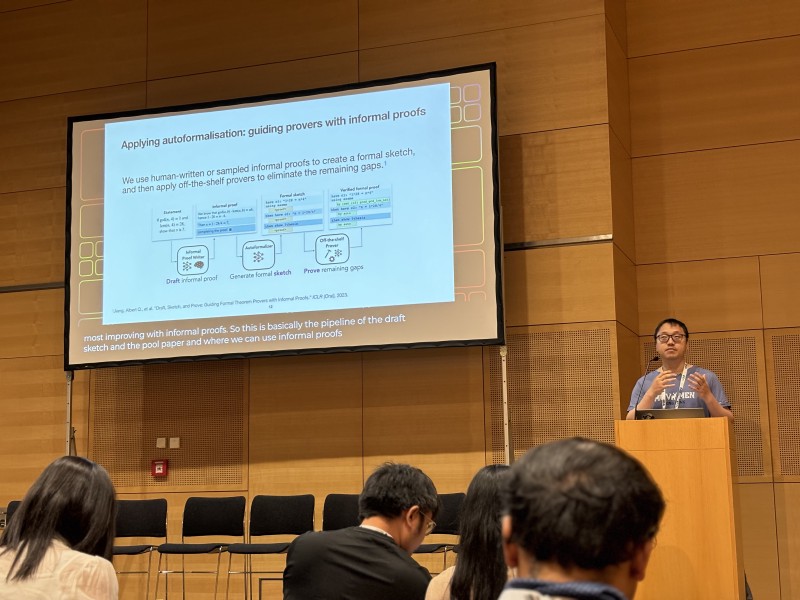

Wenda Li: From Pattern Matching and Beyond in Autoformalisation

Wenda Li’s talk started with some examples of the increasing interest in formal proofs from within the mathematics community, citing examples such as the Liquid Tensor Experiment and the Polynomial Freiman-Ruzsa conjecture.

While formal proofs have many advantages, manual formalisation is very labour-intensive and formal proofs are often less legible than informal proofs. Auto-(in)formalisation — being able to move from informal proofs to formal proofs and vice versa in an automated way — would mitigate these downsides.

Towards the end of the talk, Li covered the challenges of auto-formalisation, including the difficulty of mathematical formulation. As an example of this, he showed the IMO 2024 P5 — one of the problems AlphaProof failed to solve — and the formalised version (78 lines of Isabelle code) that took him over two hours to formulate.

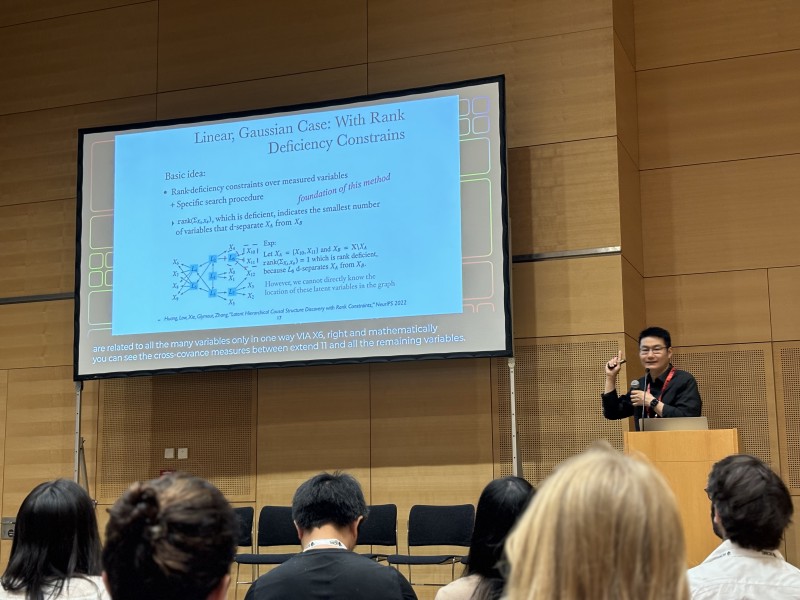

Kun Zhang: Causal Representation Learning: Uncovering the Hidden World

Kun Zhang’s talk gave an overview of causal representation learning techniques — learning a causal representation of a system, often in the form of a directed causal graph —using examples from archeology and psychology.

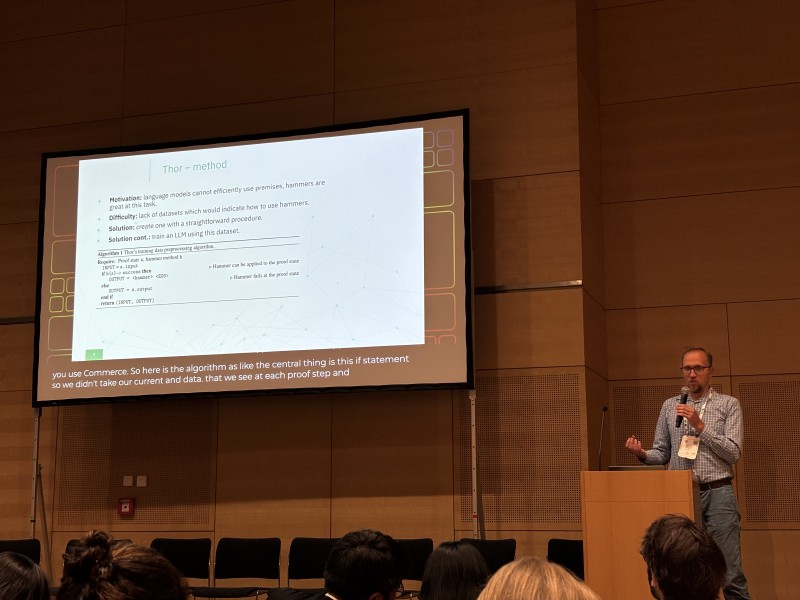

Piotr Miłoś: Hammers and Transformers

Piotr Miłoś’s talk introduced hammers — tools that “integrate proof assistants with external automated-theorem-provers (ATPs) to aid in finding and applying premises and proof methods”. One such commonly-used tool in the Isabelle language is called Sledgehammer.

Miłoś’s lab developed two new methods:

- Thor — a hybrid neuro-symbolic method, wihch leverages both learned methods (LLMs) and symbolic ATPs.

- Magnushammer — an alternative to hammers, using pre-trained transformers and contrastive learning.

Albert Q. Jiang: Synthetic Data in Mathematical Reasoning

Albert Q. Jiang’s talk started with a brief mention of two recent successes in automated mathematical reasoning using LLMs — Google DeepMind’s IMO silver medal and NuminaMath’s AIMO Progress Prize win.

However, he pointed out that LLMs are auto-regressive models — they generate new tokens based on past tokens — while many mathematical problems and datasets are structured in ways that are not well-suited to auto-regressive approaches.

For example, mathematicians generally write down and publish a final result which does not include their intermediate attempts or thinking process. After they build something, they tend to remove the scaffolding they used, making it hard to see how they arrived at their final result.

Jiang argued that synthetic data generation can be a key tool in mitigating these issues, and presented several techniques for generating both formal and informal data to this end.

One example of these techniques is what he calls rewriting: imagine a dataset of question-solution pairs where some written-up solutions start with an answer, followed by an explanation or proof. Rewriting the solution such that the explanation comes first and is followed by the answer makes it easier for auto-regressive models to learn from the data. This way it’s clear that the answer follows from the explanation, and it might be easier for these models to provide an answer once they have had the luxury of using multiple tokens’ compute to generate the explanation.