What does it take to win at competitive ML?

Just like last year, we’ve partnered with Eniola Olaleye to look back and analyse the previous year’s competitions. We’ll cover just the key points here—if you want to dive into the detail, check out Eniola’s full article here. You can also find him elsewhere on Medium, and on YouTube. He’s an experienced Zindi competitor (previously ranked #5 on the platform), so he knows what he’s talking about!

Note that we weren’t able to find all the information for each of the competition winners, so the various stats and charts represent different subsets of the data. For a more detailed look at the data, see Eniola’s blog post linked above.

Here are the highlights.

Platforms & Community

2021 saw 83 Machine Learning competitions taking place across 16 platforms, with total prize money of $2.7m.

Kaggle’s dominance continues. 30 out of 83 competitions last year took place on Kaggle, accounting for almost exactly half of the total prize money available. AIcrowd was second by number of competitions (19), and DrivenData was second by total prize money ($440k). We expect Kaggle’s lead to increase further this year with its new Community Competitions feature.

But it’s not all about Kaggle—there is a long tail of relevant platforms. 67 of the competitions took place on the top 5 platforms (Kaggle, AIcrowd, Tianchi, DrivenData, and Zindi), but there were 8 competitions which took place on platforms which only ran one competition last year, including Waymo’s $88k Open Dataset Challenge (which, incidentally, has just kicked off again for 2022!).
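To make the long tail a bit more concrete, here is a quick back-of-the-envelope calculation using only the figures quoted above. It is a rough sketch: the prize totals are rounded in the text, so the prize share is approximate, and the variable names are ours.

```python
# Figures quoted in the text above (prize totals are rounded in the source).
total_competitions = 83
total_platforms = 16
total_prize_usd = 2_700_000
kaggle_competitions = 30
aicrowd_competitions = 19
drivendata_prize_usd = 440_000
top5_competitions = 67
single_competition_platforms = 8


def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


kaggle_share = percent(kaggle_competitions, total_competitions)
aicrowd_share = percent(aicrowd_competitions, total_competitions)
top5_share = percent(top5_competitions, total_competitions)
drivendata_prize_share = percent(drivendata_prize_usd, total_prize_usd)

# The long tail: everything outside the top 5 platforms.
tail_competitions = total_competitions - top5_competitions
tail_platforms = total_platforms - 5

# Tail platforms that ran more than one competition, and how many they ran.
multi_platforms = tail_platforms - single_competition_platforms
multi_competitions = tail_competitions - single_competition_platforms

print(f"Kaggle share of competitions:  {kaggle_share}%")
print(f"AIcrowd share of competitions: {aicrowd_share}%")
print(f"Top-5 share of competitions:   {top5_share}%")
print(f"DrivenData share of prizes:    ~{drivendata_prize_share}%")
print(f"Long tail: {tail_competitions} competitions across {tail_platforms} platforms")
print(f"  of which {multi_platforms} platforms ran {multi_competitions} competitions between them")
```

So Kaggle hosted a little over a third of competitions while accounting for about half of the prize money, and the 11 platforms outside the top 5 hosted 16 competitions between them.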

Most winners (74%) had won a competition before, slightly down from last year’s 80%, and 60% of winners were solo contributors!

Languages and Frameworks

96% of 2021’s competition winners used Python. The one exception used C++. Unlike 2020, we found no examples of winners using R, Weka, or any other languages or frameworks.

As expected, Pandas, NumPy, and scikit-learn featured heavily in most solutions.

For winners who used Deep Learning, PyTorch’s dominance increased slightly to 77% this year from 72% last year, in line with PyTorch’s increasing popularity in research.

Deep Learning… is there any other way?

Deep Learning dominated, but not completely. An interesting paper published in November 2021, “Tabular data: Deep Learning is not all you need”, argued that gradient-boosted tree methods like XGBoost continue to outperform Deep Learning methods on many tabular datasets, and that prior research favouring Deep Learning had often used insufficiently tuned XGBoost models for comparison.

So—do we see this in competitive ML?

It’s a bit tricky, because many competitions don’t just use a basic tabular dataset. In 2021, roughly a third of competitions could be classified as Computer Vision, and all the solutions we found for these used a Convolutional Neural Net in some way. Out of these, EfficientNet-based architectures were most common. Fifteen percent of competitions required some form of Natural Language Processing, and (spoiler alert!) all solutions we found for these used Transformer-based architectures.

An interesting case study in the use of Deep Learning vs more traditional approaches is Microsoft and Kaggle’s Indoor Location & Navigation competition, where the first place solution performed significantly better than any of the others. This solution used an ensemble of models for different data modalities, including one where a combination of LightGBM and KNN outperformed neural-net-based models. Even this solution ended up using a neural net to process one of the other modalities, though!

One thing that’s clear is that outside of NLP we haven’t seen the total consolidation into Transformer-based models Andrej Karpathy has hinted at. Maybe we’ll see that next year?

Onwards: 2022

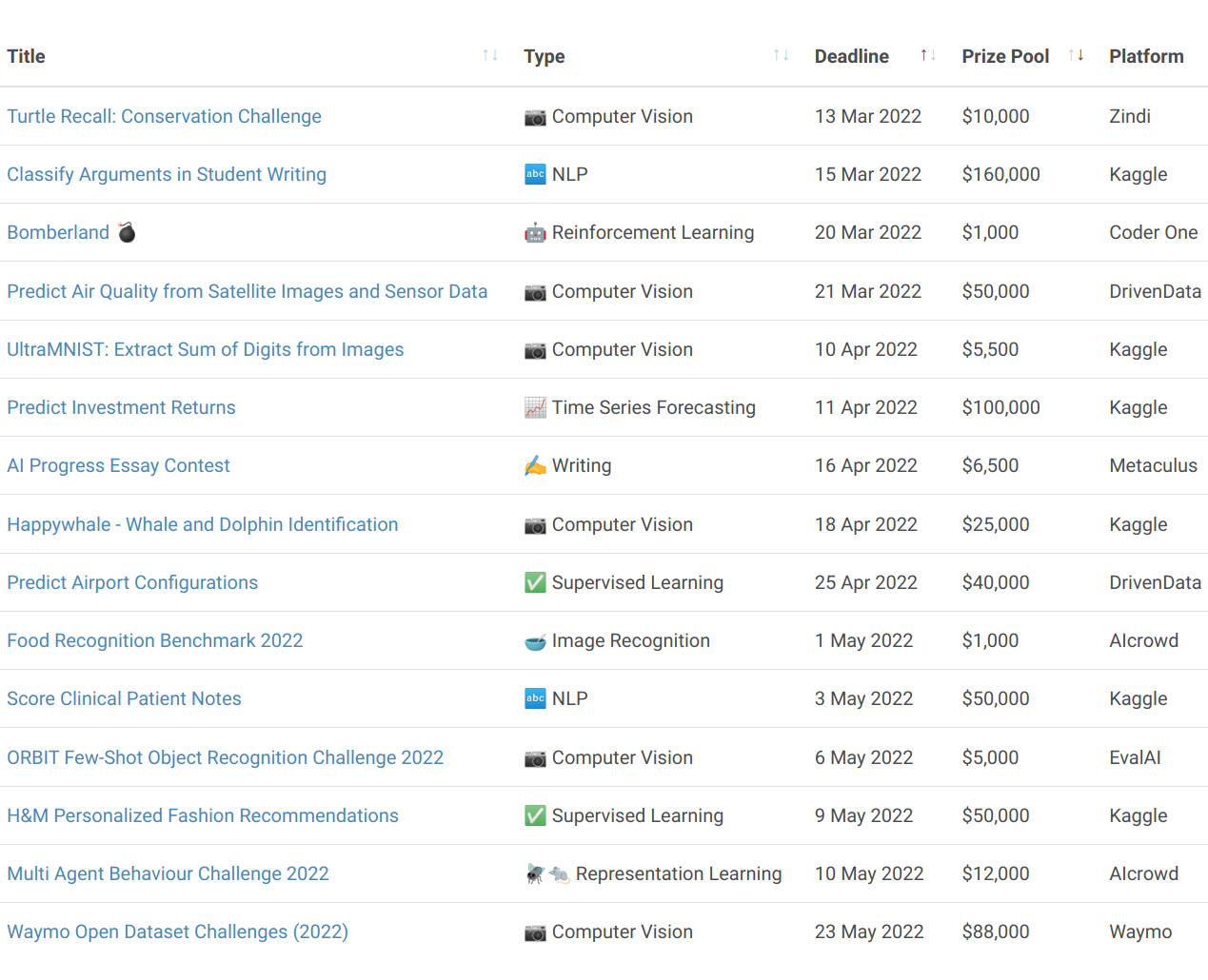

With this newfound knowledge of what works, let’s go forth and win some competitions this year! There are plenty out there. Right now there are 15 competitions live, with prize pools up to $160k.

If you’d like to get notified when new competitions launch or find others to team up with, join us in our Discord server.

GPUs: hardware cheaper, energy more expensive?

With lower crypto prices, GPU prices are down significantly on the highs of 2021. If you’ve been waiting to invest in your own deep learning hardware, this year might be the time to do so.

On the other hand, with energy prices rising across much of the world, the (sometimes significant) energy cost of training large models is increasing too.
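If you want a rough feel for what that means for your own setup, the arithmetic is simple: energy in kWh is power draw times hours, and cost is energy times your electricity price. Here is a minimal sketch. The function name and all the example numbers (a 350 W GPU, a 24-hour run, $0.30 per kWh) are hypothetical placeholders, not figures from our data, and it ignores the rest of the machine (CPU, cooling, power supply losses).

```python
def training_energy_cost(gpu_power_watts, hours, price_per_kwh, num_gpus=1):
    """Estimate the electricity cost of a training run.

    Only counts GPU power draw; real-world costs will be higher once the
    rest of the system is included.
    """
    energy_kwh = gpu_power_watts / 1000 * hours * num_gpus
    return energy_kwh * price_per_kwh


# Hypothetical example values; plug in your own hardware and tariff.
cost = training_energy_cost(gpu_power_watts=350, hours=24, price_per_kwh=0.30)
print(f"Estimated energy cost: ${cost:.2f}")
```

Swap in your own card’s power draw and local tariff to see whether a long training run is cheaper at home or in the cloud.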

Cloud GPU providers can be useful if you’re looking for occasional bursts of compute. Some of these, like Genesis Cloud, run entirely on renewable energy. Clever use of spot instances can also save you money when using larger cloud providers.

For more detail on GPU cloud providers, including pricing, free credits, and GPU models, check out our Cloud GPU Comparison page.