Developmental Robotics

In a way, thinking has been at the core of your work. Thinking about thinking, and thinking about learning, which relates closely to your later work on developmental robotics. Is there anything you can say about how you saw the developmental robotics field change during the last two decades?

Two to three decades ago, we believed that there were two main challenges with getting a robot to learn.

One was that it actually had to have a physical body. We believed that in order to have any kind of true artificial intelligence be able to think in the way that the human mind does, you have to have a body. That you’re not going to be able to understand ideas unless you have physical experience. So experience and embodiment were part of this philosophy that working in robotics could help solve.

A big thing that has changed is: we now know that you can do a lot with just words. You don’t need a body. Current large language models create very rich conceptual representations of words, and that was really surprising to me at first.

One of my first papers was about learning language and then being able to manipulate the representations of the language: reading in a sentence and turning it from active voice to passive voice, or taking a sentence, getting an embedding, and then being able to take that embedding and maybe replace one word with another word, or swap the subject and object.

Today, large language models just basically read everything ever written, and they have a really rich conceptual space of language and ideas.

The other issue was: how could a robot continually learn? It’s going through the world and experiencing, but now we have to incorporate that data that it just experienced a few minutes ago into its ongoing learning process.

We focused a lot of energy on the idea of “online learning”. What we meant by that back in the 1990s and early 2000s was that as you are moving around the world experiencing, you’re also constantly training. I think it’s been shown that you don’t have to do that. You can collect all the data and you can occasionally retrain or fine-tune your system. I don’t know if that’s very human, but it’s very effective.

Having said that, I do think there’s some strong evidence that humans do a lot when they sleep, and there’s an analogy between that and model fine-tuning. There’s a point where at the end of the day, you can reflect on everything, compile it into updated weights, and then you’re ready for a new day.
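To make the contrast concrete, here is a minimal sketch of the two strategies on a toy problem. Everything in it is an illustrative assumption rather than anything from the research described above: the one-dimensional linear model, the synthetic data stream, the learning rate, and the "end of day" interval of 100 observations are all hypothetical.

```python
import random

def sgd_step(w, b, x, y, lr=0.05):
    """One online update of a 1-D linear model y = w*x + b on a single observation."""
    err = (w * x + b) - y
    return w - lr * err * x, b - lr * err

def fit_least_squares(data):
    """Closed-form refit of the same model on all the data collected so far."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in data)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    w = cov_xy / var_x
    return w, mean_y - w * mean_x

random.seed(0)
# Hypothetical "experience": noisy readings of y = 2x + 1.
stream = [(x, 2 * x + 1 + random.gauss(0, 0.1))
          for x in (random.uniform(-1, 1) for _ in range(500))]

# Online learning: update the weights after every single observation.
w_online, b_online = 0.0, 0.0
for x, y in stream:
    w_online, b_online = sgd_step(w_online, b_online, x, y)

# Collect-then-retrain: only log during the "day", refit at the end of it.
buffer = []
w_batch, b_batch = 0.0, 0.0
for i, (x, y) in enumerate(stream, start=1):
    buffer.append((x, y))
    if i % 100 == 0:  # "end of day": compile the experience into updated weights
        w_batch, b_batch = fit_least_squares(buffer)

print(f"online: w={w_online:.2f}, b={b_online:.2f}")
print(f"batch:  w={w_batch:.2f}, b={b_batch:.2f}")
```

On a problem this simple both routes should land near the same weights. The difference is only in when the learning happens: continuously while experiencing, or in occasional passes over everything collected.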

We spent a lot of time working on both of those problems — [embodied AI and online learning] — and the world suggests that there might be different solutions that would allow us to make progress in those areas that we didn’t have at the time.

That’s really interesting. The opinions of people on where robotics is heading and where it is at the moment differ quite a lot, from thinking that robotics is practically a solved problem, to considerable pessimism. Where do you stand on this?

I’ve always seen all of this as a very long-term exploration, so I am surprised at the hype.

Some of it is deserved — and not just in robotics. Today, when you say “AI” to almost anybody, they think “deep learning”. Prior to 2015, “AI” meant symbolic reasoning, and people didn’t even really know whether machine learning should be thought of as part of AI, or as something different.

The hype doesn’t bother me too much except when people misunderstand what, say, chatbots can do. I heard someone at the grocery store talking about ChatGPT to a friend, describing it as: “I gave ChatGPT a question and then it went off and it did some research and it came back with this answer almost instantly”. The idea that it was off doing research is the most dangerous misunderstanding of chatbots and large language models. It’s fascinating what they do, but it’s so easy for people to misunderstand what they are doing and to believe that they can do something that they’re not capable of.

Thinking & Connectionism

Adaptive connectionist networks are often capable of detecting statistical regularities in the set of input patterns presented to them. After being suitably trained, connectionist networks are able to perform in reasonable ways when exposed to novel input patterns based on what they have learned during training. This ability, often called generalization, induction, or interpolation, allows a network to operate much more flexibly than a system that relies on explicit, rigid ‘rules’.

Your work with deep learning dates back to the 90s, when you decided to incorporate neural nets — or “adaptive connectionist networks” as they were sometimes called then — into your PhD research on learning and analogy-making. What made you so confident that these would work, when they had not had much success at that point?

My intuitions — and those of the people I worked with — turned out to be correct. It would have probably involved a lot of hubris and ego for me to put myself out there at the time and say that the one thing that I had picked to study was the way forward, but it was based on my understanding of the systems, and where I could see the technology going.

In my experience doing research early on there was a belief that we had to involve symbolic systems in some place. We were trying to span this gap between these neural network black boxes and some symbolic reasoning. And it felt obvious (at the time — I don’t believe it anymore!) that you had to combine those two systems in some way. But when we actually really tried to do those it always led to a dead end. It was always a limit that the symbolic system just failed us — there was no way to really connect these in a meaningful way.

So by around 2005-2010, I had completely given up on the idea of trying to create a hybrid system, and I still believe to this day that symbolic reasoning systems aren’t necessary. I believe that a neural system can handle it better. It can be more flexible. Trying to incorporate the old GOFAI (good old-fashioned AI) into modern systems is not useful.

I’m still as optimistic as I’ve always been about neural systems. I believe that we’re still on the right track. I believe that we will maybe sooner rather than later have machines that can actually think. Large language models (LLMs), I think, show a hint that thinking is possible, but I would not describe LLMs as thinking.

Obviously, LLMs go forward and just spit out one word at a time. There’s not an idea of “there’s a point that I want to get across and so I’m picking the words that will lead to that conclusion”. I mean, approximately they can generate text like that, but they don’t actually do it the way that humans do it, having a particular idea and then thinking about how to generate words that will lead people to that idea.
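As a rough illustration of "one word at a time", here is a toy generator. It is a deliberately crude stand-in and an assumption of this sketch: real LLMs use transformers over tokens with a long context, whereas this uses simple word-pair counts over a made-up corpus. The only thing it shares with them is the shape of the generation loop, where each word is chosen from what came before and nothing in the loop represents a point to be reached.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record, for each word, the words that followed it in the text."""
    followers = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=8, seed=0):
    """Emit one word at a time, each sampled only from what followed the previous word."""
    rng = random.Random(seed)
    output = [start]
    # Stop at the length limit, or when the last word was never followed by anything.
    while len(output) < max_words and followers[output[-1]]:
        output.append(rng.choice(followers[output[-1]]))
    return " ".join(output)

# Hypothetical miniature corpus.
corpus = "the robot sorts the sweets and the robot serves the guests"
model = train_bigrams(corpus)
print(generate(model, start="the"))
```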

So there’s not thinking involved. There’s no counterfactualising in these models. It’s just, as it’s been described, being a stochastic parrot. And not to diminish that — I think there’s really a lot to say about what large language models do, and the concepts that they learn.

I think we’ll be able to take the ideas of transformers specifically, maybe adding a layer or two on top of that, and turning them into thinking machines.

Do you have an intuition for what it is that’s missing in these current approaches? Because you’ve sort of ruled out online learning. You’ve ruled out a symbolic reasoning component. Is it some kind of hierarchical system with lots of transformers? Is it something totally different?

I think the big thing that’s missing, and this is not a technical term, is a loop around the entire system. That’s what thinking is. It’s a process, an internal process. These current systems are as simple as my PhD thesis was: you take an input, you pull a crank, and it outputs this data. Thinking is much more flexible inside the system.

So there has to be a loop, an inner loop, that gives the system the ability to generate some ideas, to contemplate those ideas, and then to eventually generate something.

I have no idea what that technology is. I don’t want to go too far out on a limb, but I would just say it needs to be enclosed in a loop.

This concept of a loop that you’re describing — is that in line with Douglas Hofstadter’s idea of a “Strange Loop” — one that jumps levels of meaning?

Yes, that’s right. I think the idea of the Strange Loop is that it does cross boundaries, and that’s the kind of flexibility that we hope for. And it’s important to understand that it’s not a loop like “for i in this number do…”. It’s much more like an emergent system where you can seed the system, and it’s able to bounce around a little bit, and then, maybe even artificially, you come up with a time point and say “okay, time to stop, thinking is done. What’s your result?”.

Robotics Competitions

Coming back to robotics — one early instance where you used machine learning for robotics is in the 1999 AAAI Mobile Robot Competition, where your team’s Elektro robot won an innovation award.

We entered a couple competitions that year. The one that really inspired the students was the hors d’oeuvre serving competition. The main goal was: every year, thousands of AI researchers come together at AAAI and they have dinner and cocktails. The AAAI decided it would be fun to have the robots participate in the serving of food, and so we decided to tackle that problem.

We had a robot that would roll up to you and ask you if you wanted some M&Ms. If you did, it would ask you what colour you wanted. Using its camera and some mechanical devices, it would sort the M&Ms into the colour that you wanted and then provide those.

The innovation award you won was for using recurrent neural nets in Elektro’s voice recognition system, when most of the other entries used off-the-shelf voice recognition systems. What made you confident enough to try that?

I have to think back some 25 years… the speech recognition systems at the time were black boxes. A philosophy that ran throughout my education, my teaching of others and our research, was that wherever we can we want to open those black boxes and figure out how they work and build them ourselves if we can.

Doing speech recognition with the neural network was not all that effective at the time, but we wanted to explore that on our own. And that really comes back to what makes competitions valuable — having awards for innovation rather than just performance is part of a research philosophy that accepts that it may not be the best performing right now, but here’s an interesting idea and maybe it will inspire others to continue that research, and then at some point it will be the best performing.

And that highlights something that we don’t see enough in competitions today.

Competitions vs Challenges

A lot of competitions are based on performance — were you the best? But as you mentioned, the award that we won was not for doing something the best. We were trying to be creative.

This reflects my later experience of using competitions in education. Competitions are a double-edged sword. The idea of competition can be challenging and engaging to a lot of students, but it’s not engaging to all students. It can be motivating for some, and demotivating for others.

Early on I used competitions in the classroom a lot. In fact, my colleague Lisa Meeden and I wrote a paper about the efficacy of using competitions in the classroom. You can run competitions in very different ways, and I found that doing that in an all-women’s college wasn’t necessarily the best way to motivate all students.

Is there a way in which you think competitions can be made more universally engaging?

The devil’s in the details of how the competition is run. We participated in a humanoid competition around 10 years ago, using the Pepper robot, which is now owned by SoftBank. We would not have been able to even start to compete with controlling a humanoid robot without the access we had to the winning results everybody shared from the previous year. This collaborative aspect is important.

For me it’s about putting the focus on challenge rather than competition. Competition implies that you’re competing — it’s “us against them” — whereas with a challenge it’s “us against the problem”.

I love the idea of a Grand Challenge — something that we as a community have identified as a problem worth solving, and having friendly competitions could be a really fascinating way to work on that. We just have to be thoughtful about how we do it, so that we don’t exclude potential scientists from helping solve the problem — those who are put off by the competitive framing, and who feel like they wouldn’t be able to compete.

Jupyter Notebooks

Another development which has helped bring more people into the field is the trend of using notebooks with server-side compute. It’s a nice way to create some equitable processing. If you have a substandard laptop and you’re taking a data science course, or you’re a data scientist and you work at home, or on a train or a plane, you can do that by logging into the cloud and doing that computation there.

You’ve been a big proponent of Jupyter — using it extensively in your own teaching, as well as contributing to related open-source projects and writing a book about how to use Jupyter for education.

I have, but it might surprise you to know that most of my teacher colleagues from all over the world are not fans of Jupyter notebooks. There’s a widespread fear of hidden state — that if you give someone a Jupyter notebook and they set x=4 in one cell and x=5 in another, they can very quickly get confused.

Because of this, computer science educators largely do not like Jupyter notebooks. I understand their concerns, but notebooks are a great way to tell a story, and telling stories is what all fields should be about. Especially computer science.

To me, a Jupyter notebook is a blank sheet of paper. You can write a story in it. And if you change the name of one of the characters in paragraph one, you have to change the name of the character throughout the whole story. Some educators feel that it’s too open-ended and too flexible, but I disagree — it’s a new way of doing computing and students don’t need to have their hands held at every point.

Students can definitely get themselves into trouble, but there are ways to mitigate that.

If you’re writing a book, one of the last things that you should do is reread the book from beginning to end to make sure it’s coherent. So the last thing that I do in any notebook that I’m writing is clear it all out, run it again from beginning to end, and make sure it still makes sense.
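The hidden-state worry, and the "clear it all out and run it again" habit, can be shown with a small simulation. This is only a sketch under stated assumptions: the `run_cells` helper and the three-cell notebook are hypothetical, and a plain dictionary stands in for the kernel's shared namespace.

```python
def run_cells(cells, order):
    """Execute notebook-style cells in the given order, sharing one namespace
    the way a running kernel does. Returns the namespace afterwards."""
    namespace = {}
    for index in order:
        exec(cells[index], namespace)
    return namespace

# Hypothetical three-cell notebook.
cells = [
    "x = 4",
    "y = x * 10",
    "x = 5",
]

# Interactive session: the author runs every cell, then re-runs the middle one.
messy = run_cells(cells, order=[0, 1, 2, 1])

# "Restart and run all": a fresh namespace, every cell once, top to bottom.
clean = run_cells(cells, order=[0, 1, 2])

print(messy["y"])  # 50, although the page reads as if y came from x = 4
print(clean["y"])  # 40
```

The two results differ even though the text of the notebook is identical, which is why a final top-to-bottom run is a useful coherence check.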

CS Education

Speaking of education — do you have any advice for people entering the fields of machine learning or robotics today?

It’s tricky, because my instinct as a professor over the years has been to use a very bottom-up approach, but I’m not sure that’s still the best way to learn today.

There was a school of thought in education back in the 80s and 90s where people advocated that you don’t do anything before you take computer hardware. And once you take that, you take Assembly language, and then once you take that you take C, and so on. That approach weeds out a lot of people who just don’t see the use of it.

At the other end of the spectrum, some people say: “if you’re going to get started in data science, just grab somebody else’s code and run it, and don’t worry about understanding it”.

I think my advice today would be: sure, go ahead and start with the most compelling example, generate some images, generate text, do something interesting and novel. Once you’ve done that, you can go up — for example, by talking about ethics and how this would be used, the implications, what parts of society are you affecting or are you leaving out, big ethical questions, philosophical questions. You can also go down — dive down into how the systems work, and understand some of the mathematics and computer science behind them. And then some students want to go even deeper.

The key is to attract students, show them the most exciting examples, grab their attention, get them engaged, and then dive into the science of it.

One also has to be open-minded, and willing to learn from students. In 1999, a student of mine was working on a C-based robot controller and they wanted to do a little demo. Every time they wanted to change something, they would have to recompile the C code. They found a wrapper that could take any C code and wrap it in Python, allowing for much faster iteration. I hadn’t heard of Python at this point, but after this student introduced me to Python, I used it to wrap a neural network system written in C. I think I may have been the first person to create a system that you could use to program neural networks in Python!

This system was called ConX — pronounced “connects” — short for connectionism, which was the phrase at the time for what we call deep learning today. Through that student’s work I was able to learn Python and then create the system. I fell in love with Python and we rewrote the entire system in pure Python. Today we [those ex-colleagues and I] have a wrapper around TensorFlow and Keras, because a lot of the ideas that we had in the early days are encapsulated by Keras.

Working at Comet

After several decades in education, you became one of the first employees at Comet, when it was maybe a tenth the size it is now. I’d love to know — what were the early days like, and how have things changed since then?

Five years ago, I’d just retired from being a professor of Computer Science and Cognitive Science at Bryn Mawr College, a women’s college outside of Philadelphia, and I wasn’t really sure what to do next.

Part of my motivation for retiring was to explore what could be done with modern tools and machine learning. It was the edge of this new wave of deep learning and transformers, built on the same tools that I did my PhD on, but going in really novel directions and being able to train on huge data sets. I was involved in the Jupyter project and Gideon Mendels, the CEO of Comet, emailed me with a fairly mundane question about Jupyter notebooks. After I answered this question Gideon said, “I have this machine learning startup. I’d like to talk to you”. So I drove up to New York.

At this point I didn’t know that they were doing work on machine learning; I thought they were just interested in Jupyter notebooks. But it turned out that we had a lot in common and we hit it off really well and I joined the team almost immediately.

When I joined Comet there were still a ton of things that needed to be done across the board, so I wore many hats. I immediately jumped into the Python codebase, but I also rewrote and organised the documentation at that time and I started jumping into hard tasks that spanned not only the machine learning ideas, but also technology and data representations, bringing it all together to make a useful tool for data scientists.

Now I am firmly in the area of research and I split my time still across a variety of different things. I still have a hand in reviewing documentation. I still work with the engineering team. I oftentimes will create a prototype of an idea, and then hand it off to the engineering team. I work with the product team, and also with customers, answering questions, listening to their needs, and then helping coordinate the requests with the product team and creating something new and interesting and useful.

You’ve worked with various different Comet customers, and also in the past with lots of different teams doing machine learning research. Where do you think the productivity bottleneck is in most people’s machine learning processes?

That’s a huge question; it spans so many different problems and topics and workflows. One thing that was surprising to me about the way that Comet has been used is that it’s used by many different layers of people in the organisation. From people who are monitoring the amount of energy used, to the people who are monitoring the amount of money spent on compute, to the people actually running the experiments. I think the thing that maybe stands out is just the idea of communication — being able to transform all of the data that is coming in for various purposes into useful information for the entire organisation.

That’s a thirty-thousand foot view answer. But of course, the technology is changing every day and just wrestling with the details of particular machine learning frameworks is an ongoing battle. In addition to that, what people are interested in is changing. Being able to dive into a particular model or in the processing of a model and pull out the relevant parts to create visualisations is another aspect of the entire endeavour that’s challenging and really interesting.

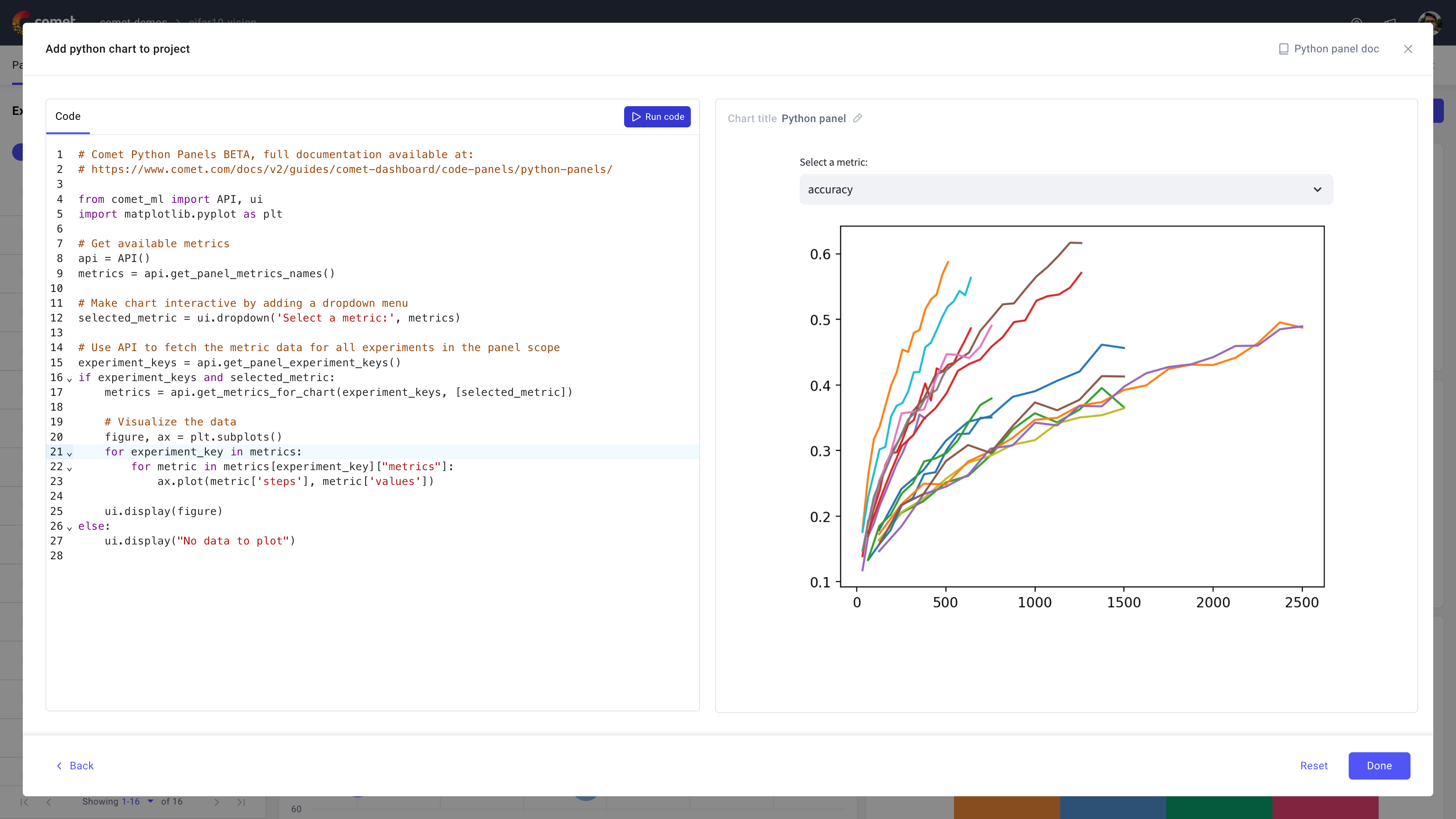

That visualisation aspect relates closely to a feature I think you contributed to — the custom Python Panels. Could you talk a bit about that feature, and also the kind of workflows that enables?

The basic idea is: lots of people use Comet to create graphs for inclusion in a report or publication. Before we had Python Panels, you would have to know exactly what chart you wanted to create, and set up the right data logging before running your experiment.

Python Panels moves that analysis and thinking to afterwards. Now you can run your experiment, and then sit back and look at the data. You can try a particular visualisation, and tweak it after the fact. So it occupies a very different spot in the data scientist’s workflow — enabling them to have access to all the same data they had at the time of running the experiment, after the experiment, and easily create visualisations and reports on the fly.

I think that was one of the most interesting and different projects that I’ve worked on at Comet, just because it creates a new way of thinking, a new spot in the workflow that wasn’t possible before.

I love my job at Comet. I think at my core I’m a problem solver, and so the main hat that I wear as Head of Research at Comet just puts me in a position where I can help solve problems of all kinds.

I’ve been involved in everything at Comet, from some really big things, such as building a hyperparameter optimizer from scratch, to small things like really beautiful and elegant confusion matrix viewers or histograms.

That’s a challenge — how to do it technologically, how to do it quickly, and then how to make it look really good — and I enjoy working with the engineering team and the product team to solve both of those sides of the puzzle.

In some ways my life is not all that different from being a professor. I was always building little pieces of software to help teach, and working with students has always been really engaging for me.

That’s probably the only thing that I really miss now that I’m not a professor anymore. Although I get to teach at Comet every once in a while too. I just love the students experiencing some of the ideas for the first time. It inspires me to this day, just to help build things that make things more understandable. To get more people involved, and engage them in this activity of science.

Comet sponsored ML Contests’ State of Competitive ML (2023) report.