There were many great workshops on Saturday, just as there were on Friday. While the venue as a whole felt a little less busy, all the workshops I saw were very well-attended.

Based on the numbers in the conference app, the most popular workshops on Saturday were Geometry-grounded Representation Learning and Generative Modelling (workshop site) and Theoretical Foundations of Foundation Models (workshop site).

On Friday, the most popular workshops had been AI for Science (workshop site) and ML For Life and Material Science (workshop site).

At various times, the Mechanistic Interpretability workshop and the LLMs and Cognition workshop stopped admitting people because the rooms were full.

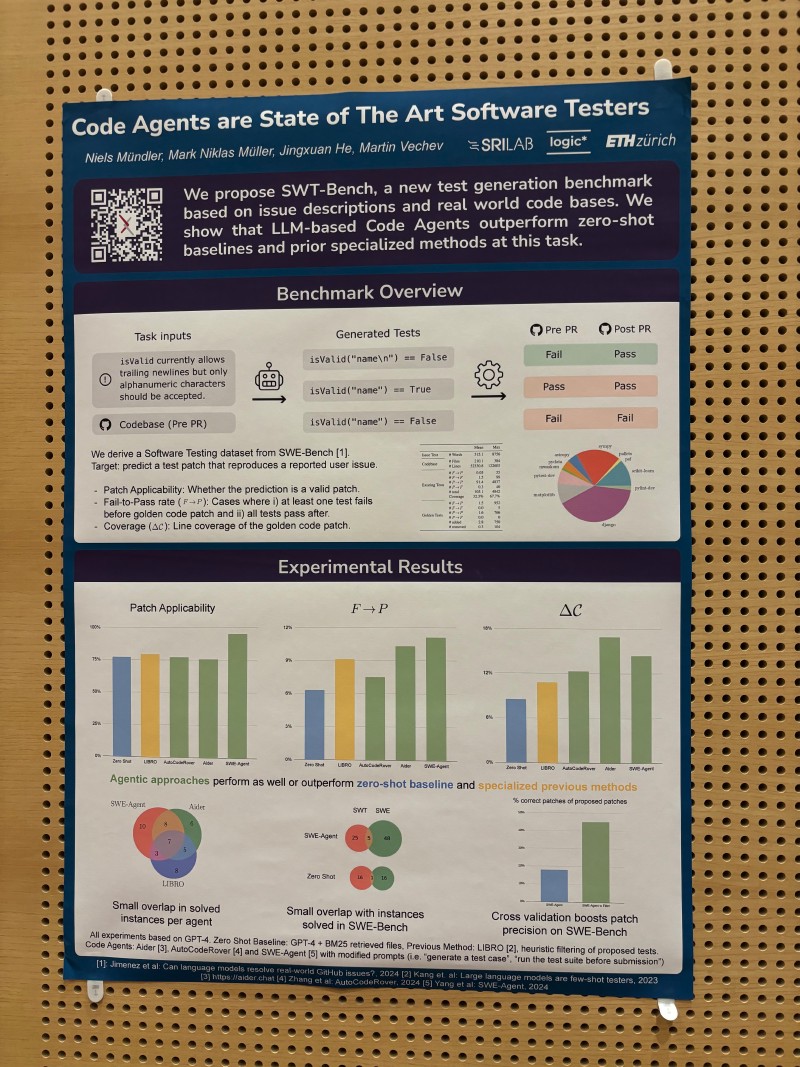

There were so many great papers and posters! To pick just one, I spoke to the authors of the “Code Agents are SOTA Software Testers” paper. They took SWE-Bench (arxiv), a dataset designed for testing code-fixing ability, and used it to build SWT-Bench, a benchmark for test generation.

The original SWE-Bench collected GitHub issues, along with the code patches applied to fix them. SWT-Bench flips this around, requiring a test to be written for a given GitHub issue. The proposed test is then validated: correct tests should fail on the pre-patch codebase, and pass on the codebase with the patch applied.
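
To make the validation rule concrete, here is a minimal sketch of the fail-then-pass check in Python. It is not SWT-Bench's actual harness, which runs tests against real repositories. To keep the example self-contained, each "codebase" is modelled as a single function, and every name here (`passes`, `is_valid_test`, `buggy_abs`, `fixed_abs`, and the two check functions) is hypothetical.

```python
from typing import Callable

Impl = Callable[[int], int]
Test = Callable[[Impl], None]


def passes(test: Test, impl: Impl) -> bool:
    """Run a test against one implementation; any exception counts as a failure."""
    try:
        test(impl)
    except Exception:
        return False
    return True


def is_valid_test(test: Test, pre_patch: Impl, post_patch: Impl) -> bool:
    """A proposed test is accepted only if it fails before the patch and passes after."""
    return (not passes(test, pre_patch)) and passes(test, post_patch)


# Toy stand-ins for the codebase before and after the fix (assumed for illustration).
def buggy_abs(x: int) -> int:
    return x  # bug: negative inputs are returned unchanged


def fixed_abs(x: int) -> int:
    return -x if x < 0 else x


# Two candidate tests for the hypothetical issue "abs returns negative values".
def check_negative_input(impl: Impl) -> None:
    assert impl(-3) == 3  # exercises the reported bug


def check_positive_input(impl: Impl) -> None:
    assert impl(3) == 3  # never touches the buggy path


if __name__ == "__main__":
    print(is_valid_test(check_negative_input, buggy_abs, fixed_abs))
    print(is_valid_test(check_positive_input, buggy_abs, fixed_abs))
```

The second candidate passes on both versions, so it is rejected: a test that never exercises the reported bug says nothing about whether the patch fixed it.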