Tuesday morning oral sessions included alignment, “positions on how we do ML research”, clustering, video, time series, and bio/chemistry applications.

I managed to catch most of the time series talks (link).

The Arrows of Time for Large Language Models (arxiv link) talk showed that, across many languages, LLMs are better at next-token prediction in the forward direction than in the backward direction.

There were two talks showing new transformer-based approaches to time-series modelling.

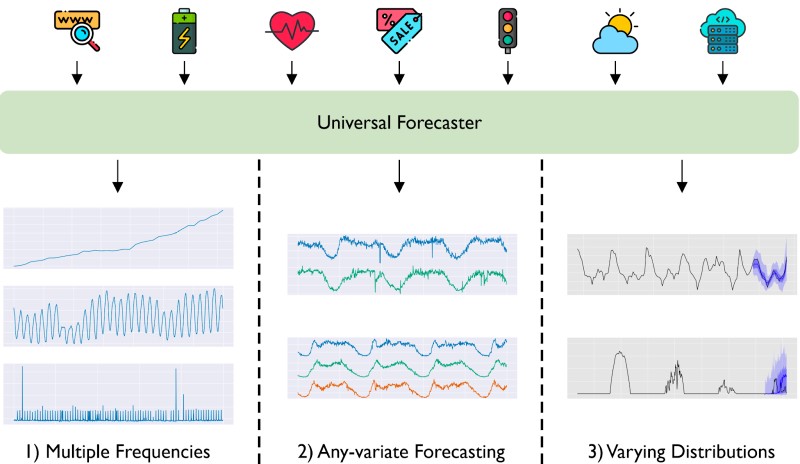

MOIRAI (Salesforce blog; arxiv) aims to be a universal time-series foundation model, through innovations including dynamic patch sizing and any-variate attention (a custom attention mechanism with several properties desirable for time series).

By pre-training on datasets with varying frequencies and numbers of variables, MOIRAI aims to outperform existing methods.
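
To make the patching idea concrete, here is a minimal sketch of splitting a series into fixed-size patches, with the patch size chosen by sampling frequency. This is not MOIRAI's implementation: the `PATCH_SIZE_BY_FREQ` mapping, its values, the function names, and the right-padding with zeros are all assumptions made for illustration.

```python
from typing import Dict, List

# Hypothetical frequency -> patch size mapping (illustrative values only,
# not taken from the MOIRAI paper). The intuition is that higher-frequency
# data gets larger patches so the sequence of patches stays short.
PATCH_SIZE_BY_FREQ: Dict[str, int] = {"yearly": 8, "daily": 16, "minutely": 64}


def patchify(series: List[float], patch_size: int, pad_value: float = 0.0) -> List[List[float]]:
    """Split a 1-D series into non-overlapping patches of length patch_size.

    The final patch is right-padded with pad_value if the series length
    is not a multiple of patch_size (an assumption of this sketch).
    """
    if patch_size <= 0:
        raise ValueError("patch_size must be positive")
    padded = list(series)
    remainder = len(padded) % patch_size
    if remainder:
        padded += [pad_value] * (patch_size - remainder)
    return [padded[i:i + patch_size] for i in range(0, len(padded), patch_size)]


def patchify_by_frequency(series: List[float], freq: str) -> List[List[float]]:
    """Pick the patch size from the (hypothetical) frequency mapping."""
    return patchify(series, PATCH_SIZE_BY_FREQ[freq])


if __name__ == "__main__":
    patches = patchify_by_frequency([float(t) for t in range(20)], "yearly")
    print(len(patches), "patches of length", len(patches[0]))
```

Each patch would then be embedded as one token for the transformer, so the patch size trades off temporal resolution against sequence length.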

Alongside the paper, the MOIRAI team released the LOTSA (“Large-scale Open Time Series Archive”) dataset (Hugging Face link) containing a collection of open time-series datasets.

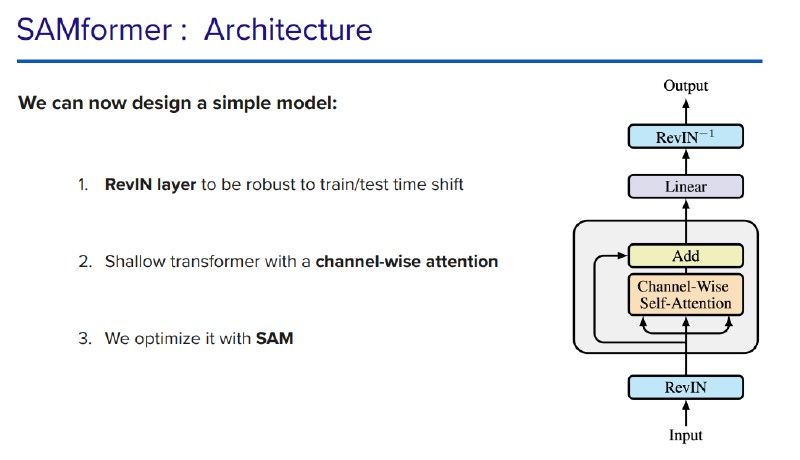

SAMformer (link to slides; arxiv) puts the lack of transformer success in time-series prediction down to the problem of sharpness: the presence of sharp local minima in the loss landscape, which leads to overfitting.

SAMformer addresses this by using Sharpness-Aware Minimisation (which smooths the loss landscape) to optimise a custom architecture that combines channel-wise attention with a RevIN layer. The authors report an improvement over other transformer-based models and performance on par with MOIRAI.
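
For readers unfamiliar with the two ingredients, here is a rough sketch of a single Sharpness-Aware Minimisation update and of RevIN-style normalisation, in plain Python. It is not the SAMformer code: the function names, the toy quadratic loss, and the default `lr` and `rho` values are assumptions, and the RevIN part omits the learnable affine parameters of the original method.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def sam_step(w: Vector, grad_fn: Callable[[Vector], Vector],
             lr: float = 0.1, rho: float = 0.05) -> Vector:
    """One SAM update: step using the gradient taken at a perturbed point.

    1. Compute the gradient g at w.
    2. Move to w_adv = w + rho * g / ||g|| (approximate worst case nearby).
    3. Apply the gradient computed at w_adv to the original w.
    """
    g = grad_fn(w)
    norm = math.sqrt(sum(gi * gi for gi in g))
    if norm == 0.0:
        return list(w)  # already at a stationary point; nothing to do
    w_adv = [wi + rho * gi / norm for wi, gi in zip(w, g)]
    g_adv = grad_fn(w_adv)
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]


def revin_normalize(x: Vector, eps: float = 1e-5) -> Tuple[Vector, float, float]:
    """Normalise one input window by its own mean and standard deviation."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    std = math.sqrt(var + eps)
    return [(xi - mean) / std for xi in x], mean, std


def revin_denormalize(y: Vector, mean: float, std: float) -> Vector:
    """Map model outputs back to the original scale of the input window."""
    return [yi * std + mean for yi in y]


if __name__ == "__main__":
    # Toy loss f(w) = sum(w_i^2), whose gradient is 2w (illustration only).
    quad_grad = lambda w: [2.0 * wi for wi in w]
    w = [3.0, 4.0]
    for _ in range(5):
        w = sam_step(w, quad_grad)
    print("weights after 5 SAM steps:", w)

    normed, mean, std = revin_normalize([10.0, 12.0, 14.0])
    print("round trip:", revin_denormalize(normed, mean, std))
```

The extra gradient evaluation in `sam_step` is what makes SAM roughly twice as expensive per step as plain gradient descent; the payoff is a bias towards flatter minima.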