Chelsea Finn’s talk (link) shared insights on how to make progress in ML from a robotics perspective.

In particular, Finn addressed the problem that ML is data-hungry, while data can be expensive or difficult to obtain. She detailed three strategies she has used in robotics and how they can be applied more broadly in ML.

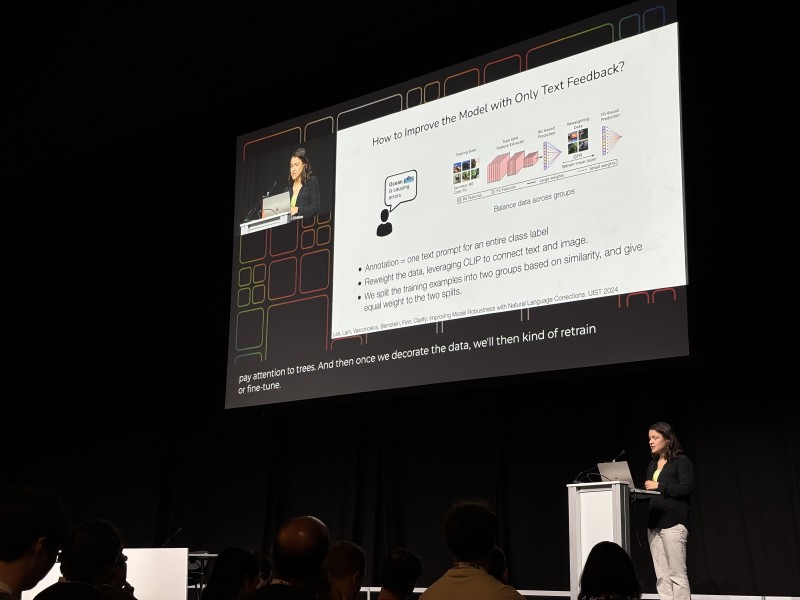

The main strategy she highlighted was natural supervision: giving a trained model a small amount of natural-language feedback that it can incorporate to improve its future predictions.

In the Yell At Your Robot paper, Finn’s lab applied this idea to robotics. They built on an existing approach that splits a robot policy into high-level (what to do) and low-level (how to do it) components, with natural language as the interface between the two. Examples of high-level commands include “move right” or “pick up the bag”.

Their innovation was to freeze the low-level policy and keep training only the high-level policy. This can be done by providing text suggestions for previously experienced states, so it requires no additional robot demonstrations or interaction with a real-world environment.
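
To make the split concrete, here is a toy sketch in Python. It assumes a hypothetical tabular high-level policy and a hard-coded low-level mapping; `LOW_LEVEL_POLICY`, `TabularHighLevelPolicy`, `act` and `apply_corrections` are illustrative names, not from the paper, which trains learned policies. The sketch only shows the structure: language is the interface between the levels, corrections are attached to already-visited states, and only the high-level side is updated.

```python
from collections import Counter, defaultdict

# Hypothetical frozen low-level policy: maps a language command to a motor
# primitive. It stays fixed; only the high-level policy below is updated.
LOW_LEVEL_POLICY = {
    "move right": "joint_delta(+x)",
    "move left": "joint_delta(-x)",
    "pick up the bag": "close_gripper()",
}


class TabularHighLevelPolicy:
    """Toy high-level policy: for each state, choose the command it was
    labelled with most often (a stand-in for a learned model)."""

    def __init__(self, default_command):
        self.default_command = default_command
        self.counts = defaultdict(Counter)  # state -> Counter of commands

    def add_example(self, state, command):
        self.counts[state][command] += 1

    def predict(self, state):
        if state not in self.counts:
            return self.default_command
        return self.counts[state].most_common(1)[0][0]


def act(high_level, state):
    """Run the hierarchy: the high-level policy picks a language command,
    and the frozen low-level policy turns it into an action."""
    return LOW_LEVEL_POLICY[high_level.predict(state)]


def apply_corrections(high_level, corrections):
    """Update only the high-level policy from (state, corrected command)
    pairs. The states were already visited, so no new robot rollouts or
    demonstrations are needed."""
    for state, command in corrections:
        if command not in LOW_LEVEL_POLICY:
            raise ValueError(f"low-level policy cannot execute {command!r}")
        high_level.add_example(state, command)


if __name__ == "__main__":
    high = TabularHighLevelPolicy(default_command="move right")
    high.add_example("bag_on_left", "move right")  # original, mistaken label
    # A human "yells" the same correction twice for one logged state.
    apply_corrections(high, [("bag_on_left", "move left")] * 2)
    print(act(high, "bag_on_left"))  # joint_delta(-x)
```

Because corrections must name a command the frozen low-level policy already knows, the feedback stays within what the robot can physically execute.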

In their CLARIFY paper, they applied a similar approach to image classification. Here, a human observes a model’s failure modes and gives targeted, concept-level descriptions of scenarios where the model performs poorly. For example, a shape classifier that has wrongly learnt to classify red shapes as circles because of a spurious correlation in the training data (most of the red training shapes happened to be circles) can be given the feedback “red”. CLARIFY then fine-tunes the model on a dataset reweighted according to each example’s image-text similarity (computed with CLIP) to the provided phrase, making it easier for the model to learn the correct rule for red shapes.
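
The reweighting step can be sketched as follows. This is one simple way to realize it, assuming the CLIP similarities are already computed and that a fixed threshold splits each class into examples that match the phrase and examples that don't, which are then given equal total weight. The function names, the threshold and this balancing rule are illustrative assumptions, not necessarily the exact scheme in the CLARIFY paper.

```python
from collections import Counter


def concept_reweight(labels, similarities, threshold):
    """Return one weight per example so that, within each class, examples that
    match the feedback phrase (similarity >= threshold) and examples that do
    not receive equal total weight.

    `similarities` stand in for CLIP image-text similarities between each
    training image and the feedback phrase (e.g. "red"); computing them with
    a real CLIP model is out of scope for this sketch.
    """
    groups = [(y, s >= threshold) for y, s in zip(labels, similarities)]
    group_sizes = Counter(groups)
    class_sizes = Counter(labels)
    # Number of non-empty subgroups (matching / not matching) per class.
    subgroups_per_class = Counter(y for y, _ in group_sizes)
    # Each subgroup of class y gets total weight class_sizes[y] / subgroups,
    # split evenly among its members, so per-class totals are unchanged.
    return [
        class_sizes[y] / (subgroups_per_class[y] * group_sizes[(y, m)])
        for y, m in groups
    ]


def weighted_mean_loss(per_example_losses, weights):
    """Weighted average used in place of the plain mean during fine-tuning."""
    total = sum(loss * w for loss, w in zip(per_example_losses, weights))
    return total / sum(weights)


if __name__ == "__main__":
    labels = ["circle", "circle", "circle", "square", "square", "square"]
    # Hypothetical similarities to the phrase "red": high means "looks red".
    sims = [0.9, 0.8, 0.2, 0.85, 0.1, 0.15]
    weights = concept_reweight(labels, sims, threshold=0.5)
    print(weights)  # [0.75, 0.75, 1.5, 1.5, 0.75, 0.75]
```

In the toy data, the single red square gets weight 1.5 versus 0.75 for each non-red square, so the rare counterexample to "red means circle" counts for more during fine-tuning.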

This seems a promising tool with many potential applications. Its use of global (concept-level) feedback contrasts with the local (datapoint-level) feedback that is standard in reinforcement learning. Providing this feedback also seems more interesting, as well as easier and cheaper, than standard data-labeling tasks!

> More data is out there, or easy to get, [we] just need algorithms that can use it well!

Two other approaches were covered: leveraging other data sources (e.g., using a pre-trained vision-to-text model to propose robot strategies from visual data), and incorporating data available at test time (in-context learning).

Papers mentioned: