The Data-centric Machine Learning Research (DMLR) workshop this year focused on the theme of “Datasets for Foundation Models” (ICML link / Workshop website).

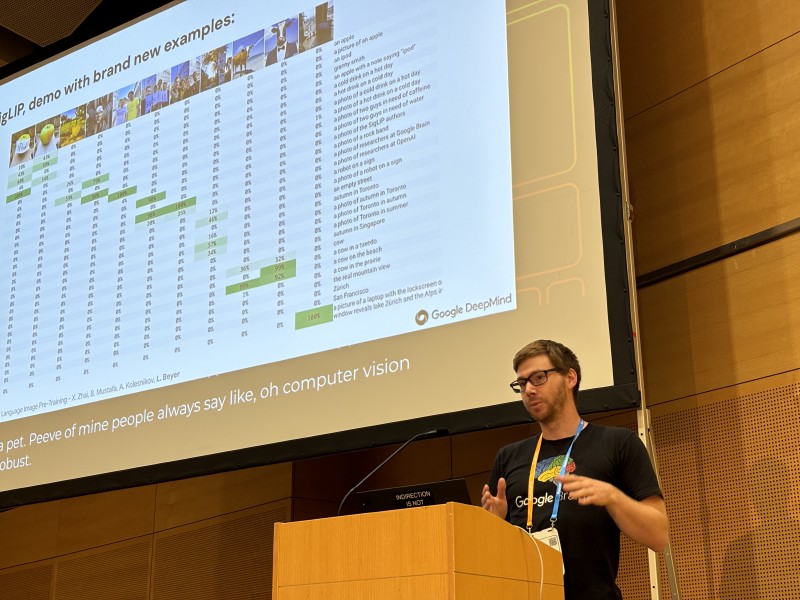

Lucas Beyer’s talk on “Vision in the age of LLMs — a data-centric perspective” made the case for language as a universal API.

He described the shift that had happened in computer vision modelling in recent years. The “classic” way to do image classification is to take an image as input, and output a probability distribution over a pre-specified set of labels (e.g. hotdog/not hotdog, or the 1,000 classes in ImageNet).

More recently, models like OpenAI’s CLIP (arxiv) and Google DeepMind’s SigLIP (arxiv), the latter of which Beyer contributed to, can be presented with an image and a set of natural-language phrases. This effectively allows them to be used as zero-shot classifiers, based on which phrase is “most likely” to accompany the image.
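To make the zero-shot idea concrete, here is a minimal sketch in plain Python. It assumes an image encoder and a text encoder have already mapped the image and each candidate phrase into a shared embedding space; the three-dimensional vectors, the phrases, and the `temperature` value are made up for illustration and do not come from any real model. The softmax over similarities follows the CLIP-style formulation; SigLIP is trained with a sigmoid loss instead, so treat this as a loose illustration rather than either model's actual code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_embedding, phrase_embeddings, temperature=0.07):
    """Score each phrase by its similarity to the image embedding.

    `temperature` is an assumed value; real models learn or tune it.
    """
    phrases = list(phrase_embeddings)
    scores = [
        cosine_similarity(image_embedding, phrase_embeddings[p]) / temperature
        for p in phrases
    ]
    return dict(zip(phrases, softmax(scores)))


# Hypothetical toy embeddings standing in for real encoder outputs.
image_embedding = [0.9, 0.1, 0.2]
phrase_embeddings = {
    "a photo of a hot dog": [0.8, 0.2, 0.1],
    "a photo of a pizza": [0.1, 0.9, 0.3],
    "a photo of a frisbee": [0.2, 0.1, 0.9],
}

probabilities = zero_shot_classify(image_embedding, phrase_embeddings)
best_phrase = max(probabilities, key=probabilities.get)
print(best_phrase)
```

The set of labels is just a dictionary of phrases, so changing the classification task means changing the text, not retraining the model.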

Beyer showed how this approach can be extended further. Models like PaliGemma (arxiv / Google blog), for which he led the development, take an (image, text) pair as input and output text. With sufficient pre-training and some preference tuning using reinforcement learning, general models like PaliGemma can be applied to many tasks that previously required task-specific model architectures, each trained from scratch.

Some examples of tasks include visual question answering (input image + text question, output text answer), image object detection/segmentation (output coordinates of bounding boxes in text), and image captioning.
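As a rough illustration of what "bounding boxes in text" can look like, the sketch below parses a detection string into pixel coordinates. It assumes a PaliGemma-style convention in which each box is written as four `<locNNNN>` tokens (y_min, x_min, y_max, x_max, each binned into 1,024 steps) followed by a label. The exact token format, the `;` separator, the `parse_detections` helper, and the sample string are all assumptions made for this example, not output from a real model.

```python
import re

# Four consecutive <locNNNN> tokens followed by a free-text label.
LOC_PATTERN = re.compile(r"((?:<loc\d{4}>){4})\s*([^;<]+)")


def parse_detections(text, image_width, image_height, bins=1024):
    """Convert text-encoded boxes into (x_min, y_min, x_max, y_max) pixels."""
    detections = []
    for match in LOC_PATTERN.finditer(text):
        values = [int(v) for v in re.findall(r"<loc(\d{4})>", match.group(1))]
        # Assumed token order: y_min, x_min, y_max, x_max, normalised by `bins`.
        y_min, x_min, y_max, x_max = (v / bins for v in values)
        detections.append(
            {
                "label": match.group(2).strip(),
                "box": (
                    x_min * image_width,
                    y_min * image_height,
                    x_max * image_width,
                    y_max * image_height,
                ),
            }
        )
    return detections


# Hypothetical model output for a 1024x1024 image.
sample_output = (
    "<loc0256><loc0128><loc0768><loc0512> hot dog ; "
    "<loc0000><loc0512><loc0512><loc0768> frisbee"
)

detections = parse_detections(sample_output, image_width=1024, image_height=1024)
for detection in detections:
    print(detection["label"], detection["box"])
```

Because the boxes are ordinary text, the same text-generating model can produce them without a dedicated detection head; the task-specific logic moves into a small parser like this one.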

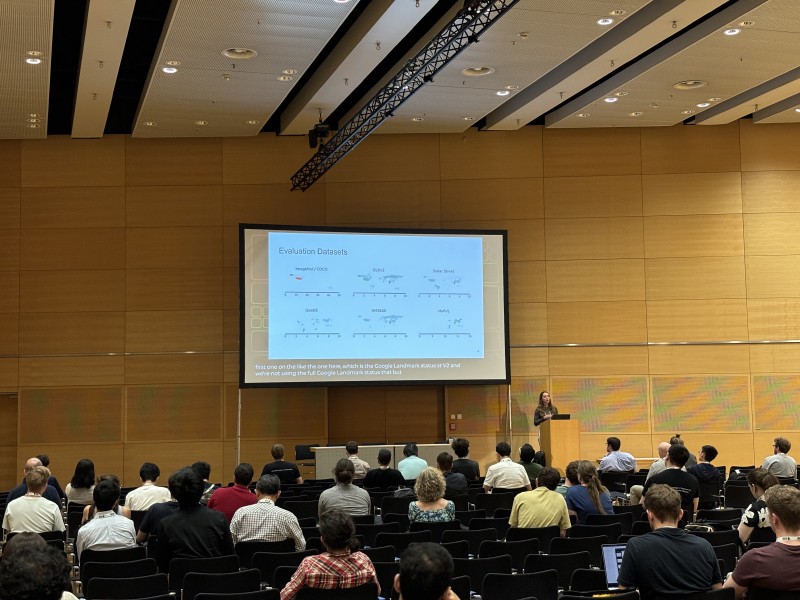

Beyer’s talk was followed by a brief presentation from Angéline Pouget on the No Filter paper, which Pouget, Beyer, and others published earlier this year (arxiv).

Pouget’s work pointed out that the common technique of filtering training data to include only English-language image-text pairs harms the trained model’s performance on many tasks. For example, models trained on filtered data misidentify the Milad Tower in Iran as the CN Tower in Canada.

This effect is exacerbated by the incentive to perform well on the popular, Western-centric ImageNet and COCO datasets. However, Pouget’s work showed that pretraining on global data before fine-tuning on English-only content can improve global performance without sacrificing performance on those benchmarks.

Despite that, her paper notes that there remain some trade-offs between optimising for English-only performance and performance on more culturally diverse data, highlighting the insufficiency of benchmarks like ImageNet for global use.

An anecdote from Beyer’s earlier talk shed some light on how these datasets came to be the way they are. He explained that when the popular COCO (Common Objects in Context) dataset was being created, the creators (based in the US) asked their children for a list of common objects that came to mind. The 80 object classes include “frisbee”, “snowboard”, “skis”, “hot dog”, “pizza”, and both “baseball glove” and “baseball bat”.

For more on these talks and others in the DMLR workshop on datasets for foundation models, visit the workshop site.