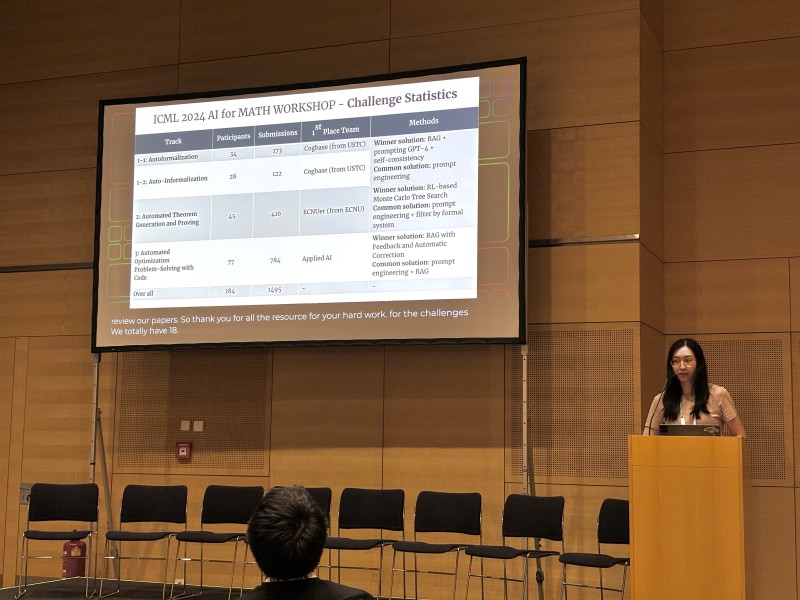

The ICML 2024 AI for Math workshop hosted three challenge tracks. In total, the challenge tracks had 184 participants and 1,495 submissions.

More information on each of the challenge tracks can be found on the workshop site.

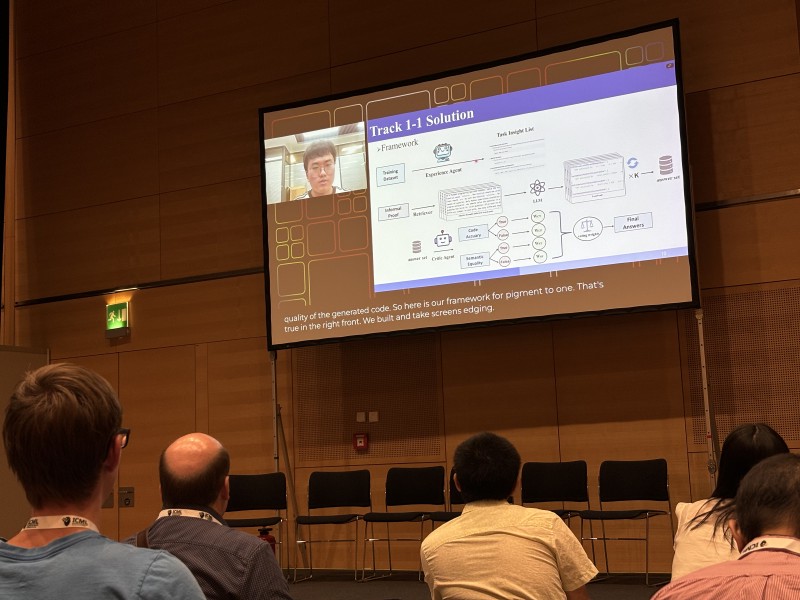

Zhiyuan Ma: Challenge Track 1

Track 1 tested auto-formalisation and auto-informalisation of proofs, into/from Lean 3.

The data for the competition included problems from the public MUSTARD dataset (arxiv), as well as some additional (unseen) annotated IMO examples for the test set.

Zhiyuan Ma from the winning Cogbase team from USTC presented the winning solution, which involved using retrieval-augmented-generation (RAG) using GPT-4, coupled with a critic agent which judged candidate solutions on accuracy and semantic similarity to the problem.

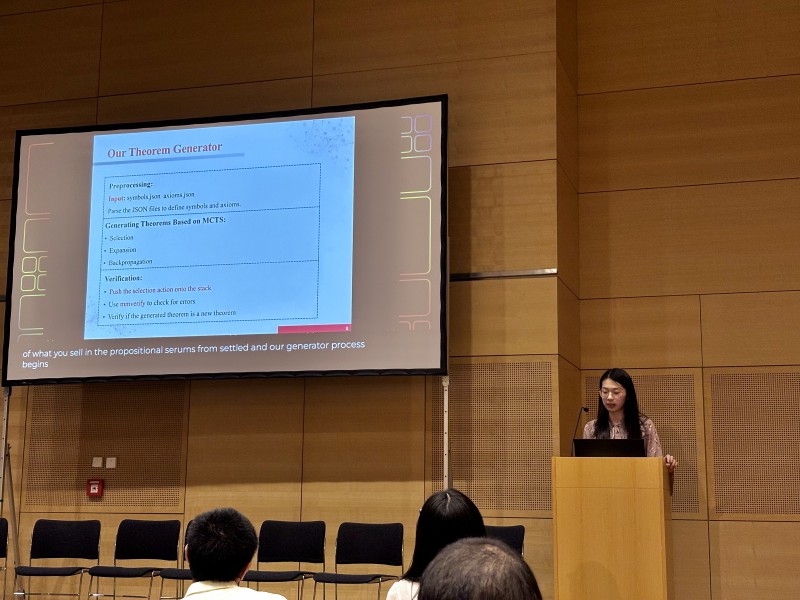

Challenge Track 2

Track 2 involved automated theorem generation and proving. Participants were provided with a set of axioms and symbols in Metamath from the ATG dataset (arxiv), and tasked to generate reusable theorems which reduce proof steps.

Lei Wang from the ECNU team presented their winning solution, which was based on monte carlo tree search through selection, expansion, and backpropagation steps.

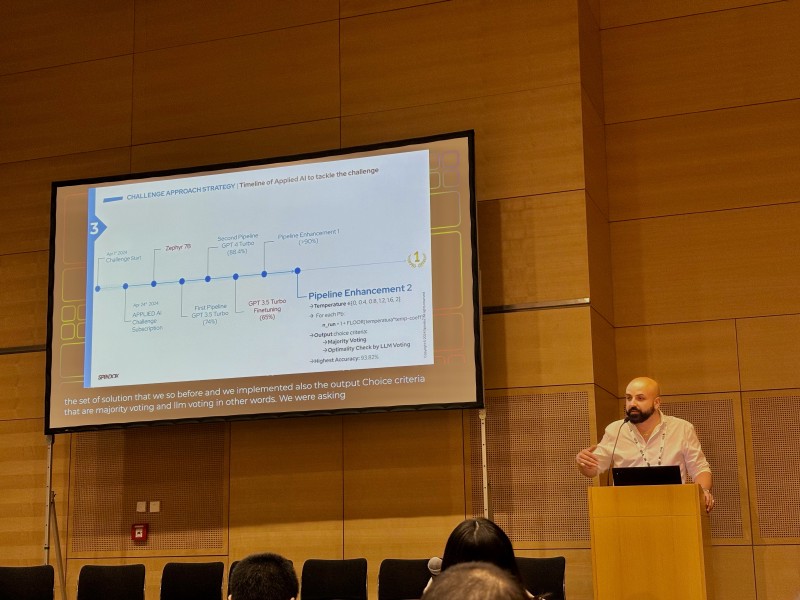

Challenge Track 3

Track three was an operations research task, and involved taking an optimisation problem stated in natural language, generating code to solve the problem (solver libraries allowed), and outputting a numerical solution. Each problem was designed to have a unique optimal solution, making evaluation easy.

This track was won by a corporate team from Spindox, and presented by Francesco Romito and Raffaele Mariosa.

Raffaele reviewed the team’s approach, as well as how it evolved over time — starting by trying the open-source Zephyr-7B model, before replacing it with GPT-3.5 Turbo (improvement), then replacing it with GPT-4 Turbo (improvement), trying GPT-3.5 Turbo with fine-tuning (regression), and in the end focusing on GPT-4 Turbo with a few pipeline enhancements.

Their pipeline enhancements involved prompt engineering for specific situations, iterating on solution code through generation-correction loops taking into account error information returned by the Python interpreter, sampling multiple temperature values, and then choosing from among multiple candidates using majority-voting.

The code for their solution is available in their GitHub repository.