Tuesday was the first day of the main conference program. In this update I cover the two invited talks on Responsible AI and Cognitive Development, the Test of Time Award talk, the Efficient Learning oral session, books at NeurIPS, and some exciting news from Jeremy Howard.

The Many Faces of Responsible AI

Lora Aroyo’s talk examined some common assumptions in ML and asked whether they hold up.

The key assumption she questioned is the use of binary ground-truth labels — for example, labelling an image as either belonging to a class, or not.

Does it make sense to assume all data instances can be labelled in a binary way? And even generalising to multi-class categorical labels, does it make sense to say that one training example belongs, with certainty, to a particular class? What about when different human labelers disagree?

Often, this disagreement is treated as noise, for example as labelers making mistakes. But there is signal in disagreement, and that signal is valuable for learning. If class labels are treated as probabilistic, stable distributions often emerge across crowds of raters, and when they do, their variance and bias are informative. Collapsing them down to one class being “true” and the others “false” throws away a lot of information and doesn’t represent the real world.
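
To make the idea concrete, here is a minimal sketch (not CrowdTruth’s own metrics, which are more involved) of keeping the crowd’s distribution instead of a majority vote. Each item’s votes become a probability distribution, its entropy serves as a disagreement signal, and a soft cross-entropy trains against the whole distribution. The classes and votes are hypothetical.

```python
import math
from collections import Counter

def soft_label(votes, classes):
    """Fraction of raters choosing each class, instead of a single majority label."""
    counts = Counter(votes)
    return {c: counts[c] / len(votes) for c in classes}

def disagreement(dist):
    """Entropy in bits: 0 when raters agree, log2(#classes) at maximal disagreement."""
    return sum(-p * math.log2(p) for p in dist.values() if p > 0)

def soft_cross_entropy(predicted, target):
    """Training loss (nats) against the full crowd distribution rather than a one-hot label."""
    return -sum(t * math.log(predicted[c]) for c, t in target.items() if t > 0)

classes = ["cat", "dog"]
clear = soft_label(["cat"] * 5, classes)                              # {'cat': 1.0, 'dog': 0.0}
ambiguous = soft_label(["cat", "dog", "cat", "dog", "cat"], classes)  # {'cat': 0.6, 'dog': 0.4}

# Majority vote gives "cat" for both items and hides the difference between them.
print(max(clear, key=clear.get), max(ambiguous, key=ambiguous.get))  # cat cat
print(disagreement(clear), round(disagreement(ambiguous), 3))         # 0.0 0.971
```

The soft label keeps the information that the second item is genuinely ambiguous, which a one-hot “cat” label would discard.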

Further reading:

- Lora Aroyo’s homepage

- The Three Sides of CrowdTruth (2014)

- The Reasonable Effectiveness of Diverse Evaluation Data (2023)

- Data Excellence for AI: Why Should You Care (2021)

Coherence statistics, self-generated experience and why young humans are much smarter than current AI

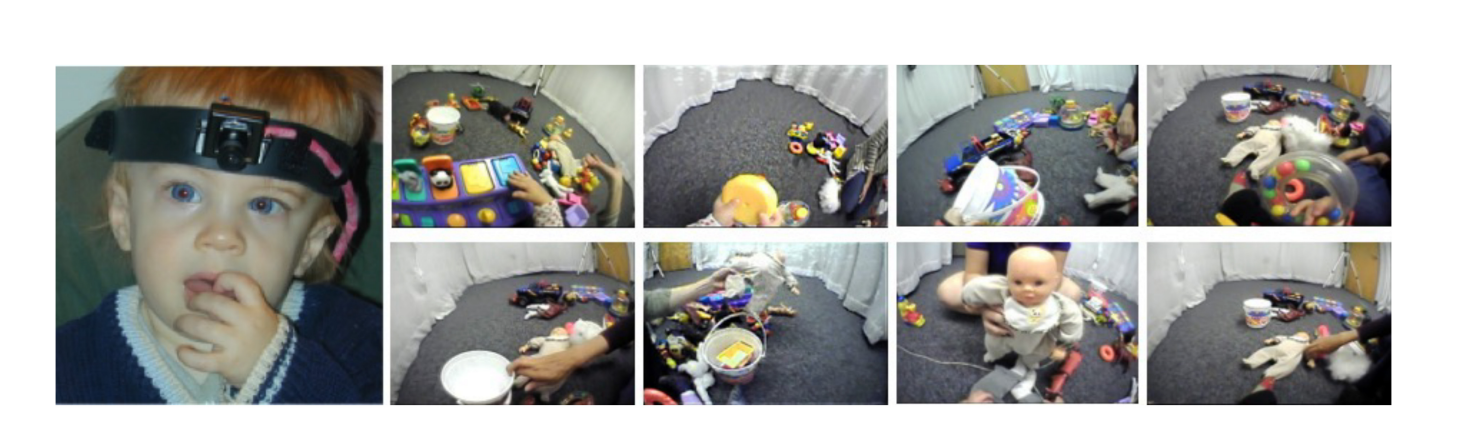

Linda Smith’s talk looked at learning through a cognitive development lens, using data gathered from babies and infants in a lab setting (using various sensors) and at home (using head-mounted cameras on parents and children).

Her research uses this data to explore the question: how do infants and young children learn from the sparse data that makes up their real-world experience?

An example of this sparsity is learning labels for new objects. The word “basket” occurs only 8 times in their 6-million-word corpus of parent-child talk, yet children manage to learn it effectively. There is a long, long tail of such words.

The proposed answer to the learning question focuses on three principles:

- Learners are controlling their own input

- Learners experience a constrained curriculum

- Experience comes in episodes

Each of these principles was reflected in the empirical data collected in both the lab and home settings: for example, in the time constant of autocorrelation of the head-mounted camera images, measured at both the pixel and semantic levels.
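
As a rough illustration of what an autocorrelation time constant measures, the sketch below computes it for a per-frame feature series. The lab’s actual pipeline isn’t described in the talk summary. The feature (a synthetic AR(1) signal standing in for, say, a frame’s pixel statistics or semantic embedding) and the “first lag below 1/e” definition are assumptions. A longer time constant means the visual input stays similar for longer, i.e. experience arrives in episodes.

```python
import numpy as np

def autocorrelation(x, max_lag):
    """Normalised autocorrelation of a 1-D series for lags 0..max_lag."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    acf = [1.0]
    for k in range(1, max_lag + 1):
        acf.append(np.dot(x[:-k], x[k:]) / denom)
    return np.array(acf)

def time_constant(x, max_lag=200):
    """First lag (in frames) at which the autocorrelation falls below 1/e, or None."""
    acf = autocorrelation(x, max_lag)
    below = np.flatnonzero(acf < 1 / np.e)
    return int(below[0]) if below.size else None

def simulate_ar1(phi, n, seed=0):
    """Hypothetical per-frame feature: an AR(1) process with autocorrelation phi**lag."""
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(n)
    x = np.empty(n)
    x[0] = noise[0]
    for t in range(1, n):
        x[t] = phi * x[t - 1] + noise[t]
    return x

slow = simulate_ar1(0.95, 50_000)  # views that change slowly (e.g. lingering on one object)
fast = simulate_ar1(0.5, 50_000)   # views that change quickly
print(time_constant(slow), time_constant(fast))
```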

After her talk, someone in the audience asked about the implications of her work for curriculum design for parents. She had a very nice answer: “Unless you really know what you’re doing, just love your child and follow them along.”

For more detail on Linda’s work, go to the Cognitive Development Lab website.

Test of Time award

The winner of the Test of Time award, given to a NeurIPS paper published ten years ago, was Distributed Representations of Words and Phrases and their Compositionality by Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg Corrado, and Jeffrey Dean.

This paper contains several ideas which have now become standard practice in NLP.

The authors broke down the key learnings from the paper as:

- Semi-supervised objectives applied to large text corpora are the key to natural language understanding.

- Fast, parallel, weakly synchronised computation dominates in ML. (Conveniently, some of the authors were also working on DistBelief around the same time, which gave them a way to run this kind of large-scale asynchronous training.)

- Focus compute where it matters. (e.g. rare vs common tokens)

- Tokenisation can be used to solve seemingly nuanced problems.

- Treating language as a sequence of dense vectors is more powerful than expected.
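
Two mechanisms from the paper illustrate the “focus compute” and “tokenisation” points. Frequent words are randomly discarded during training with probability 1 - sqrt(t / f(w)), where f(w) is the word’s relative frequency. Word pairs that co-occur more often than chance are merged into single phrase tokens using the score (count(a b) - δ) / (count(a) × count(b)). Below is a minimal Python sketch of both. t = 1e-5 is the typical value suggested in the paper, while the toy corpus, δ = 1 and the merge threshold are made up for illustration.

```python
import math
from collections import Counter

def discard_probability(relative_freq, t=1e-5):
    """Subsampling: chance of dropping one occurrence of a word with this corpus frequency."""
    return max(0.0, 1.0 - math.sqrt(t / relative_freq))

def phrase_scores(tokens, delta=1):
    """Score each adjacent pair by (count(a b) - delta) / (count(a) * count(b))."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    return {pair: (n - delta) / (unigrams[pair[0]] * unigrams[pair[1]])
            for pair, n in bigrams.items()}

def merge_phrases(tokens, scores, threshold):
    """Greedily join adjacent pairs scoring above threshold into single tokens, e.g. new_york."""
    out, i = [], 0
    while i < len(tokens):
        pair = tuple(tokens[i:i + 2])
        if len(pair) == 2 and scores.get(pair, 0.0) > threshold:
            out.append("_".join(pair))
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return out

print(round(discard_probability(0.01), 3))  # a word making up 1% of tokens: 0.968
print(discard_probability(1e-6))            # a rare word is always kept: 0.0

tokens = "new york is a city and new york has a port".split()
scores = phrase_scores(tokens)
print(merge_phrases(tokens, scores, threshold=0.1))
# ['new_york', 'is', 'a', 'city', 'and', 'new_york', 'has', 'a', 'port']
```

Dropping most occurrences of very common words spends training updates on the informative rare ones, and the phrase merge lets a plain word-level model learn a vector for “new_york” that isn’t just the sum of “new” and “york”.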

Efficient Learning

This session was absolutely packed. Continuing the theme of “do more with less” from earlier in the week, the efficient learning oral session featured four talks which all tackled efficiency from different perspectives.

Excitingly, the approaches can all yield significant benefits, and there’s no obvious reason why they couldn’t all be applied together, leading to compounding gains. I was only able to attend the first three talks; the paper for the fourth talk can be found here.

Monarch Mixer

The Monarch Mixer paper, presented by Dan Fu, achieves its improvements by designing around how linear algebra operations actually execute on current hardware.

Noting the quadratic complexity scaling inherent to Transformers (attention is quadratic in sequence length, MLPs quadratic in model dimension), the authors explore the question: can we find a performant architecture that is sub-quadratic in both sequence length and model dimension?

It turns out the answer is yes, and using products of block-diagonal matrices and a few other tricks they present an architecture with competitive performance on text encoding, image classification, and text decoding tasks while reducing parameter count by 25-50% and wall-clock time by up to an order of magnitude.
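
The core building block is a Monarch matrix: a product of block-diagonal matrices interleaved with a fixed permutation. Here is a minimal numpy sketch of that general idea. It assumes the form M = P L P R, where L and R are block-diagonal and P is the permutation “reshape to an m x m grid and transpose”. The exact parameterisation in M2, and how it uses such matrices for sequence mixing, are not reproduced here. For n = m² inputs, each block-diagonal multiply costs n^1.5 operations and the matrix has 2n^1.5 parameters, versus n² for a dense layer.

```python
import numpy as np

def block_diag_matvec(blocks, x):
    """Multiply a block-diagonal matrix by x.

    blocks has shape (m, m, m): m square blocks of size m x m, so the full
    matrix is n x n with n = m * m. Cost is m * m^2 = n^1.5 multiply-adds.
    """
    m = blocks.shape[0]
    chunks = x.reshape(m, m)  # chunk i is multiplied by block i
    return np.einsum("ijk,ik->ij", blocks, chunks).reshape(-1)

def transpose_perm(x, m):
    """Fixed permutation P: view x as an m x m grid and transpose it (so P @ P = I)."""
    return x.reshape(m, m).T.reshape(-1)

def monarch_matvec(L, R, x):
    """Compute M @ x with M = P L P R without materialising the n x n matrix."""
    m = L.shape[0]
    y = block_diag_matvec(R, x)
    y = transpose_perm(y, m)
    y = block_diag_matvec(L, y)
    return transpose_perm(y, m)

def dense_monarch(L, R):
    """Materialise M explicitly (O(n^2) memory); used only to check the fast path."""
    m = L.shape[0]
    n = m * m
    P = np.stack([transpose_perm(e, m) for e in np.eye(n)], axis=1)
    Ld = np.zeros((n, n))
    Rd = np.zeros((n, n))
    for i in range(m):
        s = slice(i * m, (i + 1) * m)
        Ld[s, s] = L[i]
        Rd[s, s] = R[i]
    return P @ Ld @ P @ Rd

rng = np.random.default_rng(0)
m = 4  # n = 16
L = rng.standard_normal((m, m, m))
R = rng.standard_normal((m, m, m))
x = rng.standard_normal(m * m)
print(np.allclose(monarch_matvec(L, R, x), dense_monarch(L, R) @ x))  # True
print(L.size + R.size, (m * m) ** 2)  # 128 256: Monarch vs dense parameter count
```

The permutation is what lets two block-diagonal factors mix information across all n positions. Without it, the product would stay block-diagonal.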

QLoRA: Efficient Finetuning of Quantized LLMs

QLoRA, presented by Tim Dettmers, approaches the problem of memory bloat in LLMs from an information-theoretical perspective.

Naively fine-tuning a 70B parameter language model takes 840GB of GPU memory, or 36 consumer GPUs. LoRA (prior work, leveraging Low Rank Adapters) takes that down to 154GB of GPU memory, or 8 consumer GPUs. QLoRA reduces that further to 46GB of GPU memory, or 2 consumer GPUs.

QLoRA introduces a new data type called 4-bit NormalFloat (NF4) that is information-theoretically optimal for normally distributed weights. Weights are quantised to NF4, and the quantisation constants are then themselves quantised (double quantisation).
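
The idea behind NF4 can be sketched in plain Python. Place 16 levels at quantiles of a standard normal distribution, so that more levels sit where roughly normal weights are dense. Then quantise each block of weights by absmax scaling and storing the 4-bit index of the nearest level. This is a simplified illustration, not the bitsandbytes implementation. The offset value and the 8-positive/7-negative/zero split are assumptions modelled on the NF4 construction, and the weights are made up. The per-block `scale` is the quantisation constant that double quantisation would compress further; that step is not shown.

```python
from statistics import NormalDist

def nf4_style_codebook(offset=0.9677):
    """16 levels in [-1, 1] at normal quantiles: 8 positive, 7 negative and an exact zero.

    The offset (how far into the tail the outermost quantile sits) is an assumed value.
    """
    nd = NormalDist()
    pos = [nd.inv_cdf(offset - i * (offset - 0.5) / 8) for i in range(8)]
    neg = [-nd.inv_cdf(offset - i * (offset - 0.5) / 7) for i in range(7)]
    top = pos[0]  # largest magnitude, used to normalise the levels to [-1, 1]
    return sorted([v / top for v in pos + neg] + [0.0])

def quantize_block(block, codebook):
    """Absmax-scale one block into [-1, 1] and keep the index of the nearest level."""
    scale = max(abs(w) for w in block) or 1.0
    codes = [min(range(len(codebook)), key=lambda i: abs(w / scale - codebook[i]))
             for w in block]
    return codes, scale

def dequantize_block(codes, scale, codebook):
    """Map 4-bit indices back to approximate weights."""
    return [codebook[c] * scale for c in codes]

cb = nf4_style_codebook()
weights = [0.02, -0.15, 0.4, -0.8, 0.05, 0.0, 0.3, -0.01]
codes, scale = quantize_block(weights, cb)
restored = dequantize_block(codes, scale, cb)
print(codes)
print([round(w, 3) for w in restored])
```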

This makes fine-tuning 70B LLMs possible on a (high-end) consumer desktop with two RTX 3090/4090 GPUs, as well as leading to significant energy savings and inference latency improvements, all while maintaining performance equivalent to 16-bit models.
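
Some back-of-the-envelope arithmetic shows where the weight memory goes for a 70B model. The block sizes (64 weights per quantisation block, 256 constants per second-level block) are assumed from the QLoRA paper’s defaults. This covers the frozen weights and their quantisation constants only, not the adapters, optimiser state or activations that make up the rest of the totals quoted above.

```python
PARAMS = 70e9   # 70B parameters
BLOCK = 64      # weights per quantisation block (assumed QLoRA default)
DQ_BLOCK = 256  # first-level constants per second-level block (assumed QLoRA default)

def gigabytes(params, bits_per_param):
    """Storage in GB (1e9 bytes) for `params` values at `bits_per_param` bits each."""
    return params * bits_per_param / 8 / 1e9

# Overhead of storing the per-block scaling constants, in bits per weight.
overhead_single = 32 / BLOCK                           # one fp32 constant per block
overhead_double = 8 / BLOCK + 32 / (BLOCK * DQ_BLOCK)  # 8-bit constants + one fp32 per 256 of them

print(gigabytes(PARAMS, 16))                         # 140.0 GB of weights in 16-bit
print(gigabytes(PARAMS, 4))                          # 35.0 GB of weights in 4-bit NF4
print(gigabytes(PARAMS, overhead_single))            # 4.375 GB of constants without double quantisation
print(round(gigabytes(PARAMS, overhead_double), 2))  # 1.11 GB of constants with double quantisation
```

Double quantisation cuts the constant overhead from 0.5 to about 0.127 bits per weight, which saves roughly 3 GB at this scale.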

Scaling Data-Constrained Language Models

This paper, presented by Niklas Muennighoff, was a runner-up for the outstanding paper awards.

It acknowledges that LLM training traditionally doesn’t repeat data, and examines whether that really makes sense when we’re in a data-constrained regime.

The conclusions — for natural language tasks in English — are:

- Repeating training data for LLMs is actually fine up to a point, and 4 epochs is a good trade-off.

- Supplementing natural language data with up to 50% code data helps.

- Quality filtering (removing some training data) and then repeating that filtered data can actually be better than using all the training data directly.

It then goes on to propose some scaling equations for the repeated-data regime, analogous to the Chinchilla scaling laws for the single-epoch regime.

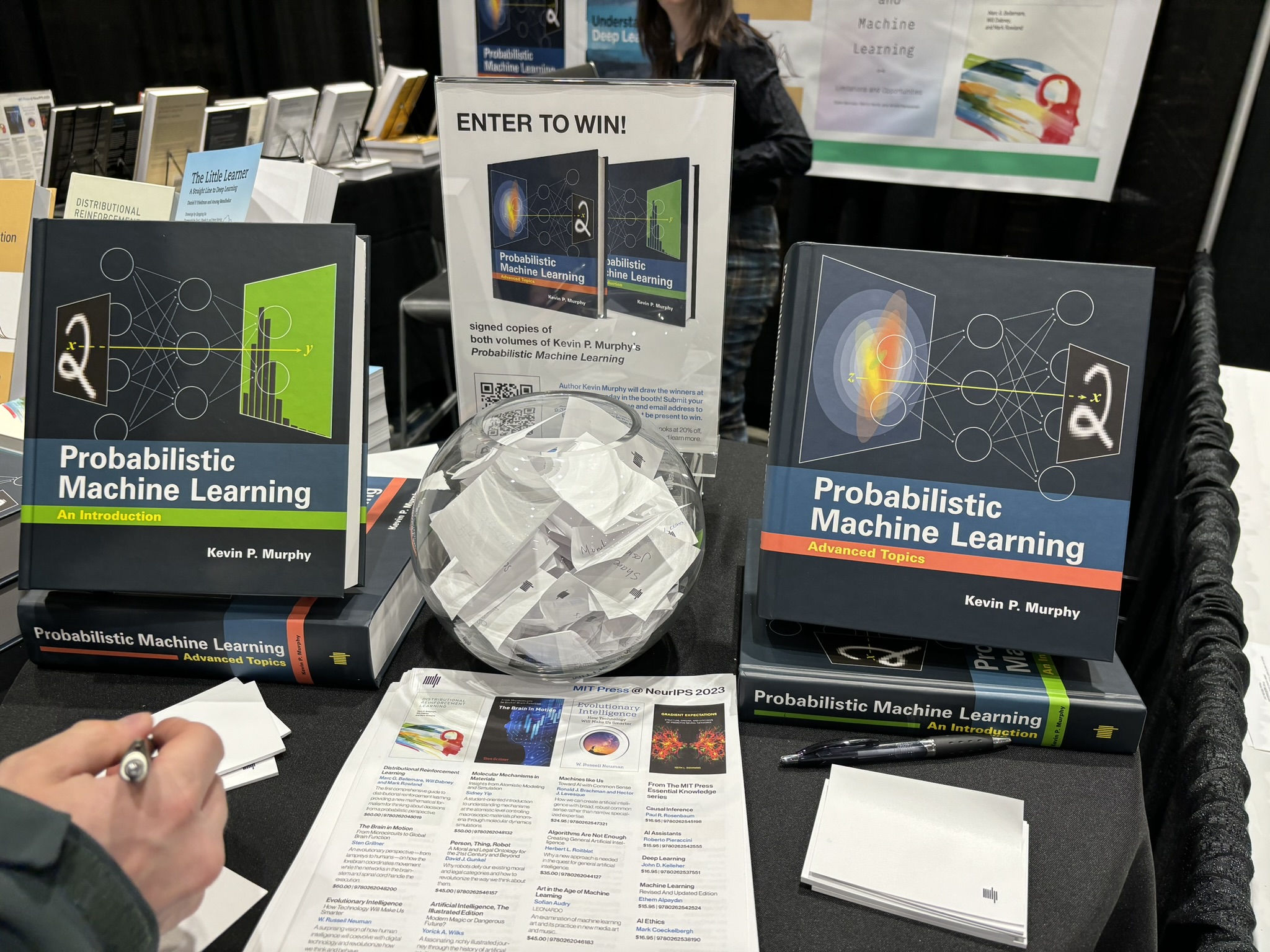

Books at NeurIPS

Highlights from the publishers in the exhibition hall.

MIT Press will have Kevin Murphy signing his Probabilistic Machine Learning books on Thursday from 9:30am. Go to their booth before that to enter a prize draw to win signed copies of both volumes, or check out their website for some limited-time discounts.

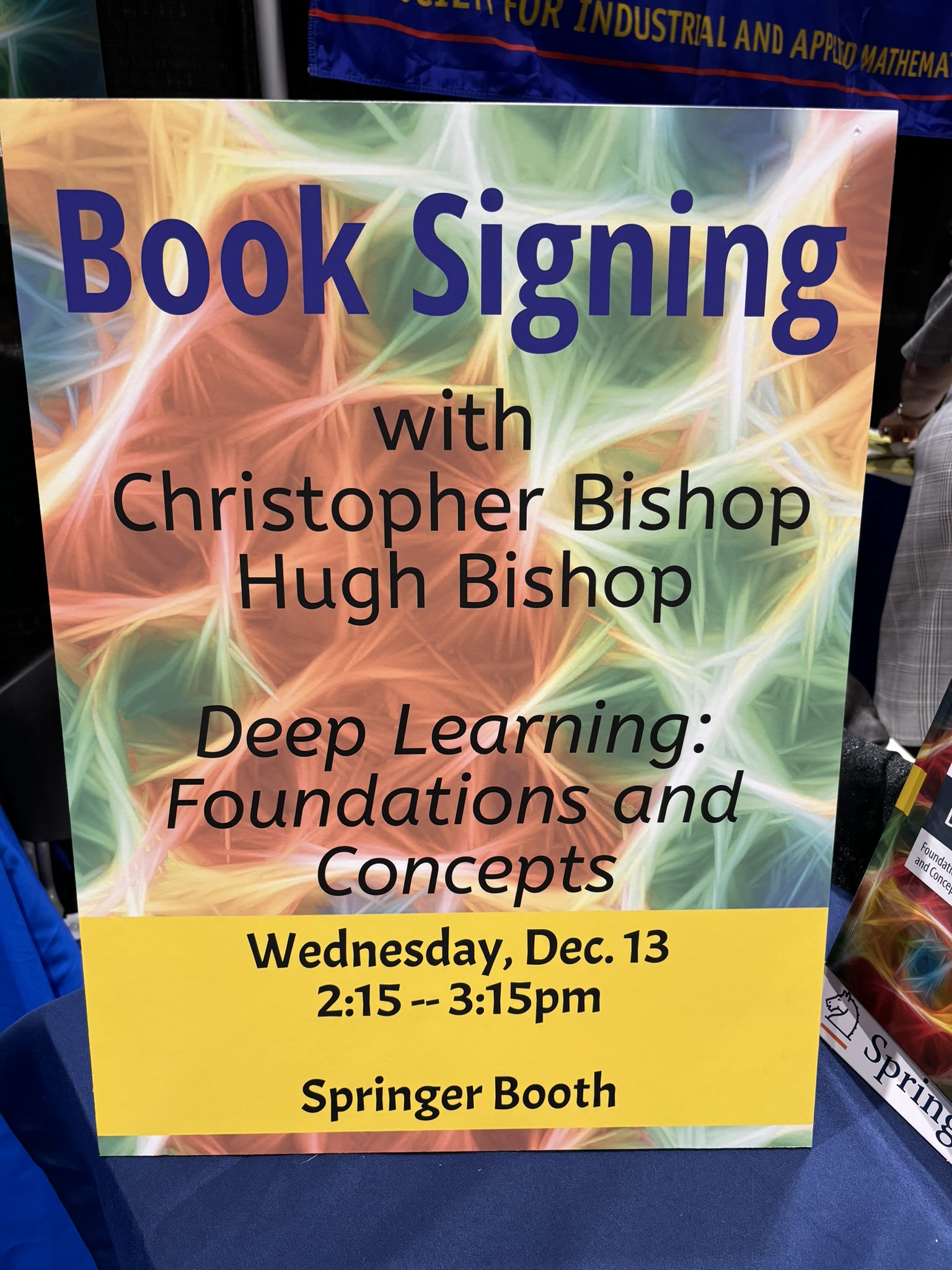

Springer will have Christopher Bishop at their booth 2:15-3:15pm on Wednesday to sign his Deep Learning: Foundations and Concepts book.

SIAM have a wide range of books on industrial and applied mathematics, useful for anyone wanting to brush up on foundational material. One high performance scientific computing title on their stand introduces C, OpenMP, MPI, CUDA, and OpenCL.

Cambridge University Press also have many interesting books and are offering significant discounts during the conference. Tong Zhang’s Mathematical Analysis of Machine Learning Algorithms has been popular this week.

NeurIPS-adjacent news: Answer.AI

Jeremy Howard (fast.ai/Kaggle) just announced that he’s launching a new AI R&D lab, Answer.AI, together with Eric Ries (Lean Startup/LTSE).

Jeremy is at NeurIPS this week, so if you’re interested in applied research, track him down and say hi. They’re not just looking for full-time people — they’re keen to support academics at every level as well.

More info on their launch blog post and on Twitter.

Two more days of the main conference track to follow, and then two days of workshops and competitions.

Read our blog from the main conference’s second day here, and keep an eye on our NeurIPS 2023 page for more updates.