ICRA 2023 took place in London from 29 May to 2 June 2023. The conference featured 12 competitions and hundreds of competitors.

Competitions spanned simulation, remote evaluation, hackathons, and in-person challenges. Some competitions were highly accessible and had dozens of entrants; others required more knowledge or investment, and had fewer but more specialised teams.

Alongside this post on competitions at ICRA 2023, we have also just published a general post on Robotics Competitions, covering the motivations of organisers and participants, and categorising types of competitions.

In-Person Competitions

F1Tenth: Autonomous Racing

Setup

F1Tenth was one of the most accessible and engaging competitions, and the first one visible when entering the competitions hall.

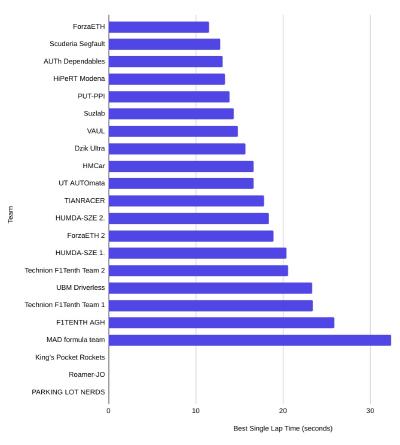

It’s a one-tenth scale autonomous race, and this edition — the 11th one so far — saw 22 teams from all over the world competing to have the fastest car.

This edition was a collaboration between TU Munich, UPenn, and King’s College London.

The particular track layout was unknown, so teams had to be prepared to adapt to whatever track layout and floor surface they encountered.

The competition recreates a lot of interesting aspects of Formula 1 racing:

- Teams get plenty of “free practice” time at the start to get to know the track, and tune their car or software as required.

- The first stage of the competition is a qualifying round, where teams take turns to set the fastest single-lap times.

- The second stage of the competition involves head-to-head racing (in the case of F1Tenth it’s 1v1 rather than all-v-all).

- Track parameters change over time! Participants noted that traction got worse as the track got dirtier over time.

- Having a great team, effective communication, and a good strategy for quick diagnostics and improvement was clearly key.

There is an upper limit on the size of the car, but teams have flexibility around the exact design, sensors, computing hardware, and driving software.

For a robotics competition, F1Tenth is really quite affordable. The LiDAR sensor that most of the teams used costs around $1,600, but some teams went with an alternative that costs around $400, allowing for a total build cost of under $1k. On the other hand, some well-resourced university teams had ten copies of their car in their lab, and had brought multiple copies with them for redundancy.

Approaches

Most teams drove purely based off 270° LiDAR, with few exceptions, and followed a relatively similar approach. This is likely because F1Tenth provides an excellent free online course to help competitors get started. Some of the approaches used in the competition are covered in this course — for example, the F1TENTH AGH team from Krakow University used the follow-the-gap method, driving based on the currently available LiDAR data, whereas many of the other teams opted to first do a round of mapping, allowing them to plan an optimal path through the whole track and then attempt to drive that. For compute hardware, most of the teams seemed to use either Intel NUCs or Nvidia Jetson variants.
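To make the follow-the-gap idea concrete, here is a minimal sketch in Python. It is not any team's code: the function name, the safety distance, the "bubble" size, and the convention that higher beam indices are further to the left are all assumptions made for illustration. It takes a single LiDAR scan, masks out beams that are too close, and steers towards the centre of the widest remaining gap.

```python
import math


def follow_the_gap(ranges, fov_deg=270.0, safety_dist=0.5, bubble=2):
    """Return a steering angle in radians (0 = straight ahead).

    ranges: LiDAR distances in metres, evenly spaced across fov_deg.
    All parameter values are illustrative assumptions, not tuned settings.
    """
    n = len(ranges)
    if n < 2:
        raise ValueError("need at least two beams")

    # A beam is "free" if nothing is closer than the safety distance.
    free = [r > safety_dist for r in ranges]

    # Block out a small bubble of beams around the closest obstacle.
    closest = min(range(n), key=lambda i: ranges[i])
    for i in range(max(0, closest - bubble), min(n, closest + bubble + 1)):
        free[i] = False

    # Find the longest run of consecutive free beams (the widest gap).
    best_start, best_len, start = 0, 0, None
    for i, is_free in enumerate(free + [False]):  # sentinel closes the last run
        if is_free and start is None:
            start = i
        elif not is_free and start is not None:
            if i - start > best_len:
                best_start, best_len = start, i - start
            start = None

    if best_len == 0:
        return 0.0  # no gap found; a real car would also slow down or stop

    # Steer towards the centre beam of the widest gap.
    target = best_start + (best_len - 1) / 2.0
    angle_per_beam = math.radians(fov_deg) / (n - 1)
    return (target - (n - 1) / 2.0) * angle_per_beam
```

Because this only uses the current scan, it needs no map, which is the trade-off against the map-then-plan approach most other teams took.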

The PARKING LOT NERDS team had the boldest and most unusual strategy, using a vision-based behavioural cloning approach. This involved driving around the track manually to gather data, then training a neural net on that data to learn a mapping from vision to control parameters. They also mentioned the Donkey Car and DIY Robocars projects as inspirations.
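The core of behavioural cloning is plain supervised learning on (observation, action) pairs recorded while a human drives. The sketch below shows that idea in pure Python, with two loud simplifications: a linear model stands in for the neural net, and a short hand-made feature vector stands in for a camera image. The function names and hyperparameters are hypothetical.

```python
def train_behavioural_cloning(observations, actions, lr=0.1, epochs=500):
    """Fit action ~ w . x + b to human demonstrations by gradient descent.

    A linear model is used here purely as a stand-in for a neural net.
    """
    dim = len(observations[0])
    n = len(observations)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, a in zip(observations, actions):
            # Error between the model's action and the human driver's action.
            err = sum(wi * xi for wi, xi in zip(w, x)) + b - a
            for j in range(dim):
                grad_w[j] += 2.0 * err * x[j] / n
            grad_b += 2.0 * err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def policy(w, b, observation):
    """Predict a control value (e.g. steering) for a new observation."""
    return sum(wi * xi for wi, xi in zip(w, observation)) + b
```

The learned policy is then run on the car in place of the human driver; its quality depends heavily on how well the recorded laps cover the situations the car will meet.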

Team Dzik Ultra, a previous winner, ended up not being able to show off their latest improvements in the form of better obstacle detection/avoidance and reduced latency, as one of their components suffered a mechanical failure and they were not able to get a replacement shipped out in time. This was a shame, as they had put significant effort into several relatively low-level solutions, as well as rigorous benchmarking of their autonomous driving vs manual driving, and monitoring latency using high-frame-rate cameras.

Modena’s HiPeRT team and TU Wien’s Scuderia Segfault both looked very promising, and met in the quarter-finals after having qualified within 0.6s of each other. HiPeRT showed they share their Modena roots with Ferrari, with a great driving style and some bold overtakes earlier in the competition. After one round won by each of the teams (in a best-of-3 format), the final round was a nail-biter, and was very narrowly won by Scuderia Segfault. You can see some short videos of this round on Twitter.

Zurich’s ForzaETH were the favourites from the start, having qualified more than one second faster than all the other teams. They were experienced, well-organised, and well-resourced. They also presented a paper on automated driving at the conference. In addition to having a smart automation approach, they also took some key steps in manual tuning. They divided the track into eleven sectors, and manually tuned certain parameters, such as maximum speed, for each sector. This allowed them to quickly and effectively adjust their strategy to the track.
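Per-sector tuning of this kind can be as simple as a lookup table keyed on distance along the lap. The sketch below is an illustration only: the sector boundaries, speed values, and function names are made up, and it is not based on the team's actual software.

```python
import bisect


def build_sector_lookup(sector_ends_m, max_speeds_mps):
    """Return a function mapping distance along the lap to a speed cap.

    sector_ends_m: increasing distances (metres) at which each sector ends;
                   the last entry is the total lap length.
    max_speeds_mps: one hand-tuned speed cap per sector.
    """
    if len(sector_ends_m) != len(max_speeds_mps):
        raise ValueError("need exactly one speed cap per sector")
    lap_length = sector_ends_m[-1]

    def max_speed_at(distance_m):
        s = distance_m % lap_length  # wrap around for later laps
        # A point exactly on a boundary belongs to the following sector.
        return max_speeds_mps[bisect.bisect_right(sector_ends_m, s)]

    return max_speed_at


# Hypothetical three-sector track: fast straight, tight corner, medium sweep.
speed_cap = build_sector_lookup([10.0, 25.0, 40.0], [6.0, 3.0, 5.0])
```

Changing one number in the table changes the car's behaviour in one part of the track only, which is what makes this style of tuning quick to do trackside.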

Notably, other than PARKING LOT NERDS, the use of deep learning was limited. Since most cars just used LiDAR, rather than cameras, there were no applications for off-the-shelf vision algorithms. The second team from Zurich’s ETH did make use of a small neural net as a more computationally efficient alternative to other model-predictive control methods, as presented in their poster titled “RPGD: A Small-Batch Parallel Gradient Descent Optimizer with Explorative Resampling for Nonlinear Model Predictive Control”.

As for the head-to-head part: some teams used exactly the same driving setup during racing as they did in qualifying, whereas others explicitly modelled the rival cars in order to be better at overtaking.

Results and Advice

Scuderia Segfault and ForzaETH both breezed through their semi-finals without conceding a round, putting the two teams with the fastest qualifying times in the finals. In the end ForzaETH won, but Scuderia Segfault raced well, improving on their previous results, and vowing to come back and win in future.

The organisers were really happy with the outcome of the competition, and particularly impressed with the reliability of the teams’ cars.

The top three teams shared some insights into their strategies, and some advice for future participants:

- Experience is super valuable! They all felt they improved a lot every time they took part.

- Work on reliability. You can’t win if you don’t finish…

- Get the basics right. Ensure the perception stack (localisation + opponent detection) is solid, so the planning and control components are working with the right information.

- Don’t get stuck in a strategic local minimum. Try new ideas and approaches once your method hits diminishing returns.

- When testing, look at lots of plots of your data, and try to really understand what is happening with your car as it’s driving around.

- Working well as a team and communicating effectively is critical, especially when last-minute technical decisions need to be made.

A few other notable points:

- There were a lot of crashes, particularly during practice sessions. This led to a track geometry that was constantly changing slightly, which caused issues for some teams who would need to remap when this happened.

- At one point, Dzik Ultra’s car experienced a mechanical failure, and dumped some oil onto the track. This obviously affected the traction significantly, and required teams to tune their cars’ parameters to avoid crashing.

- Wi-Fi interference was a problem for many of the teams, as in many of the other competitions. Most teams used a mixture of Wi-Fi and Bluetooth to manage communication between their car, their remote control handset, and their laptop(s).

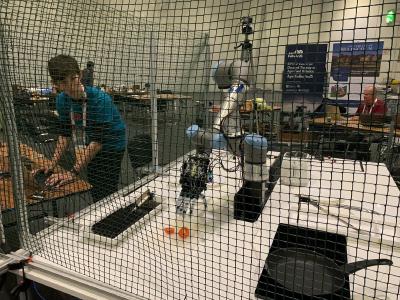

Autonomous Quadrupeds

The autonomous quadruped robot challenge (AQRC), at ICRA for the first time, was a collaboration between NIST and UKAEA RACE.

The goal of the competition was to support teams developing quadruped platforms, allowing them to do systematic testing, thereby pushing forward the state of the art for emergency response robots. The competition course was also a step towards building standards that can be used for certifying platforms and operators.

Seven teams took part in this competition, which aimed to have quadrupeds complete segments of an obstacle course autonomously, but also allowed the robots to be remotely operated where autonomy wasn’t possible. Points were awarded for both autonomy — how well can the robot operate without external input — and mobility — how well can the robot navigate particular obstacles, even if it needs to receive high-level input on the direction in which to travel.

KAIST’s team DreamSTEP, using DreamwaQ, won the overall competition and autonomy part, with MIT’s Improbable AI performing the best in terms of mobility with their custom gait developed using reinforcement learning.

Videos of the competition are available here.

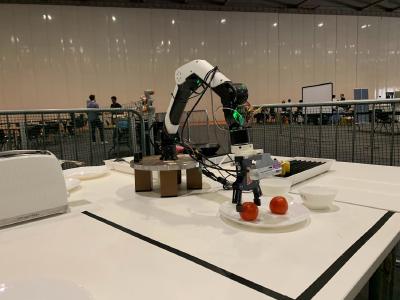

Cooking Breakfast

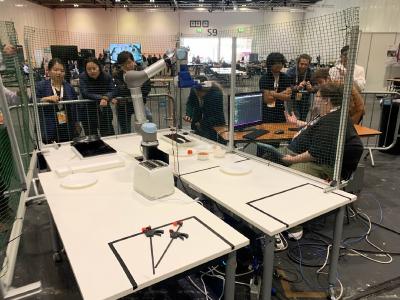

The PUB.R (Preparation and dish Up of an English Breakfast with Robots) competition, organised by the Lincoln Centre for Autonomous Systems, was almost certainly the most challenging competition of the conference, with an aspirational goal of a robot completing tasks in three stages:

- “Shopping” (gathering ingredients and transporting them to the cooking area)

- “Cooking” (chopping vegetables, heating ingredients on the hob, and plating them)

- “Serving” (transporting the plate to the judges’ table, and answering questions about the food using speech)

Teams could choose to skip certain stages entirely (and forgo all points for those stages), or complete certain subtasks manually (and forgo some points). They would then be judged on how well they did at each of the stages. The breadth of work required in this competition was unusual and challenging (fine object manipulation, mobility, speech), but the ability for teams to choose which subtasks to attempt made up for that.

This was an in-person competition, and required the teams to transport their robots to the conference venue. This creates a significant hurdle to participation, but ensures that all teams are doing the same tasks in the same environment, and makes the competition much more engaging for spectators.

Three teams took part: CAMBRIDGE (from the University of Cambridge), DISCOVER (from Tsinghua University), and CREATE (from EPFL; École polytechnique fédérale de Lausanne).

After the qualification stage, teams DISCOVER and CREATE made it through to the finals, and the finals were very closely contested.

In the end, DISCOVER narrowly won, scoring 58% against CREATE’s 56%. The teams had quite different approaches, but both were very impressive.

EPFL’s team CREATE used a robot hand developed in their lab, as shown in Josie Hughes’ presentation at the Agrifood Robots Keynote. The hand’s motion is driven by tendons, and the fingers are somewhat flexible, which makes it particularly well-suited to grasping and manipulating delicate food items. The team decided to take part in the competition partly to showcase the capabilities of their custom-built hand. They did not attempt the phases of the challenge that required mobility — gathering ingredients and serving the plate — instead focusing on their strengths.

In order to adapt to the specific tasks of the competition, they used teaching (manually moving the hand to certain configurations suited to the tasks) combined with offsets for the actual location of the items. Their hand looked very impressive slicing tomatoes and carrying bread.

Tsinghua University’s team DISCOVER also used an entirely custom manipulator, and in fact their entire robot arm was built in their lab. Their entry was a collaboration with AgileX, and they attempted a broader range of tasks than CREATE. Part of their aim in taking part was to validate their hardware, as a step towards commercialisation, as well as a useful impetus to do the integration work required to complete the sorts of real-world tasks that made up the competition.

The judges were highly impressed with the entries. They acknowledged that handling food is a particularly hard problem, and that the teams did well considering the difficulty of the tasks. They felt that this was a good step towards a useful benchmark in this space.

Navigation (BARN)

The Benchmark Autonomous Robot Navigation (BARN) challenge had 5 in-person teams competing this year, selected from dozens of teams who took part in the simulation stage of the competition.

Like the F1Tenth competition, this challenge involves timed autonomous navigation through a new environment, but in the BARN challenge the participants all use the same hardware (a Clearpath Jackal), and the environment features more obstacles and narrow passages.

The teams are provided with a simulator and 300 pre-generated environments.

This year’s competition was won by the team from KU Leuven and Flanders Make.

Assistive Robots

In the METRICS HEART-MET Physically Assistive Robot Challenge, the task centered around fetching an item for a person in a home. This involved several stages:

- Enter a recreation of a home set up in the conference hall, then find the people in the home.

- Approach the people, interrupt their conversation in a socially acceptable way, and ask if they would like the robot to fetch them anything.

- Fetch the requested item, and bring it to the person who requested it.

Like many other competitions, doing this outside a lab setting forced the competitors to face several new issues:

- There was significant ambient noise, not only from robots in the other competition tracks, but also from other people standing around talking. This caused problems for almost all of the teams’ speech recognition systems.

- The hordes of people standing around watching the competition threw off the vision-based person-detection systems, and teams had to come up with innovative last-minute changes like angling the cameras down slightly to avoid picking up people outside the “home” environment.

- Wi-Fi interference meant that some teams unexpectedly had to run a cable between their robot and their laptop. In order to prevent tangling, a team member would then need to walk next to the robot holding the cable. But this also threw off the person-detection system!

- Some of these robots have built-in safety systems which allow users to create virtual “walls” by placing magnetic tape on the floor, constraining the area the robot can operate in. One of the teams with such a robot discovered that there was some tape like this under some areas of the conference hall floor, stopping their robot from moving!

These issues all led to lots of debugging, and all three teams made a lot of progress throughout the competition.

Manufacturing

The Manufacturing Robotics Challenge, organised by the University of Sheffield Advanced Manufacturing Research Centre with robot arms provided by Kuka, took a hackathon approach where competitors had to build their solutions during the conference. Out of a large group of applicants, 16 were selected to compete in person, forming three teams. The teams were arranged so that each team had a mix of participants with different experience levels.

The teams faced a number of challenges of increasing difficulty:

- Build the tallest Jenga tower using the robot arm, with the blocks starting in known positions

- Same as above, but with the blocks in dynamic positions and identified using a computer vision system

- The same as above, but with additional points for blocks of certain colours

Unlike most of the other competitions, this competition allowed people with a mix of robotics experience to gain first-hand experience using expensive industrial robot arms. It also allowed less experienced participants to learn from the more experienced people in their team.

Virtual/Remote Competitions

Grasping and Manipulation

The Robotic Grasping and Manipulation Competitions had two tracks: manufacturing, and cloth manipulation/perception.

Manufacturing

The Manufacturing track was organised by NIST, and centered around robots performing assembly operations on standardised assembly “task boards”.

These task boards provide a useful benchmark that can be used to compare robots.

Cloth Manipulation and Perception

The Cloth Manipulation and Perception track focused on building benchmarks for cloth manipulation, and entailed three separate tasks:

- Grasp point detection (purely perception)

- Unfolding a folded or crumpled cloth (perception and manipulation)

- Folding an unfolded cloth (perception and manipulation)

Four teams took part, and most teams attempted one or two of the three tasks. The actual competition took place in each team’s own lab, using fabric sent to them by the organisers. This allowed the teams to participate using their own custom hardware (unlike in the BARN challenge and RoboMaster Sim2Real), but without needing to ship it to the conference (like in many of the other competitions).

In the end, the team from Ljubljana won the perception task, and the team from Ghent won both manipulation tasks.

The teams all had quite different approaches. Of the teams that took part in the manipulation tasks, one team had two separate hands with flexible grippers, whereas the other team had a single hand with two sliding grippers.

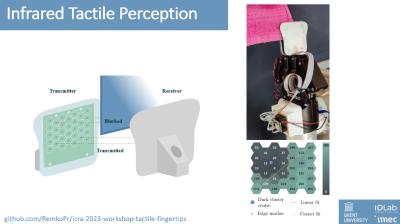

The competition demonstrated its organisers’ case for the need for standardised benchmarks in cloth manipulation. With limitations around simulation for fabric-like materials and tactile sensing, the sim-to-real gap in this space is currently larger than in more mature areas of robotics that deal with rigid materials. Considering the heterogeneity of the approaches (both in terms of manipulator design and control algorithms), the next few years in this space could bring exciting developments.

The competition also clearly reached its goals of fostering collaboration between the various teams, with lots of interesting discussions — for example, the team from Ghent sharing how they 3D-printed the flexible ends on their custom manipulators on the same 3D printer that the team from Imperial already have access to, just using a different material.

Human-Robot Collaboration

The Human-Robot Collaborative Assembly Challenge had five teams taking part remotely. Teams were allowed to use any sensor/hardware setup, as long as they used off-the-shelf components.

Sim2Real

Tsinghua University’s RoboMaster University Sim2Real Challenge is an international competition open to all university students.

This iteration consisted of navigation and grasping challenges, centered around locating cubes tagged with certain numbers, picking them up, and placing them in the correct location.

Teams were able to develop their solutions in a simulator, and the competition took place in a lab in Beijing, with video streams viewable at ICRA in London.

Ethics

This year’s Roboethics Competition consisted of two parts:

- The “Ethics Challenge”, where teams wrote reports describing frameworks for overcoming ethical challenges in particular applications of robotics.

- The Hackathon, where teams tried to implement the proposed frameworks. This took place during the conference, with both remote and in-person participants.

There were 9 proposals for the ethics challenge, and the two which were selected for the hackathon both focused on care home scenarios.

Simulation-based Competitions

Humanoid Robot Wrestling

The Humanoid Robot Wrestling competition was fully simulator-based, and open to anyone. Run by Cyberbotics in their open-source Webots simulator, the competition requires teams to program autonomous humanoid robots to engage in 1v1 wrestling matches.

The initial qualification phase whittled the field down from 69 to 32 teams using a ladder-based system, and the final 32 teams then faced off against each other in a single-elimination bracket before a winner was crowned: user “Chrake Peith”.
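For readers unfamiliar with the format, a single-elimination bracket halves the field each round, so 32 teams need five rounds. The sketch below is a generic illustration: the seeding rule (top of the ladder plays the bottom) and the `play` callback are assumptions, not the organisers' actual pairing logic.

```python
def run_single_elimination(seeds, play):
    """Run a single-elimination bracket and return (winner, rounds_played).

    seeds: entrants ordered by ladder position (best first); the count must
           be a power of two.
    play:  callable taking two entrants and returning the winner
           (hypothetical; in the competition this would be a simulated match).
    """
    n = len(seeds)
    if n == 0 or n & (n - 1):
        raise ValueError("number of entrants must be a power of two")

    remaining = list(seeds)
    rounds = 0
    while len(remaining) > 1:
        m = len(remaining)
        # Assumed pairing: strongest remaining entrant faces the weakest.
        remaining = [play(remaining[i], remaining[m - 1 - i]) for i in range(m // 2)]
        rounds += 1
    return remaining[0], rounds
```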

The matches can all be viewed on Cyberbotics’ YouTube Channel.

Interestingly, the organisers managed the competition through GitHub. Participants create a private repo with their strategy code, and give the organisers access to the repo. Every time a participant pushes a change to their code, GitHub Actions triggers a new match to happen based on their current ladder position. This match runs on the organisers’ own servers, allowing participants to use their GPU for model inference, and then the ladder is updated based on the match outcome.
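The exact ladder rule isn't spelled out above, so the following sketch assumes one common scheme: a pushed update triggers a match against the entry directly above, and the two swap places if the challenger wins. The function name and data layout are hypothetical.

```python
def apply_match_result(ladder, challenger, challenger_won):
    """Return a new ladder after the challenger plays the entry above them.

    ladder: list of participant names, index 0 being the top spot.
    Assumed rule: a win swaps the two entries; a loss changes nothing.
    """
    position = ladder.index(challenger)
    updated = list(ladder)
    if position > 0 and challenger_won:
        updated[position - 1], updated[position] = (
            updated[position],
            updated[position - 1],
        )
    return updated
```

Under this assumed rule, a participant can only climb one place per push, so the ladder settles gradually as people keep iterating on their code.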

The organisers shared their template under a generous open-source licence, hopefully enabling others to run simulation-based competitions like this with minimal additional setup. Using GitHub for user management bypasses some potential privacy/data handling concerns.

This was the first competition in the series, and the next one will be at IROS 2023 in October.

Picking, Stacking, and Assembly

The Virtual Manipulation Challenge, run by Fraunhofer IPA, had three tracks: bin picking, bin stacking, and assembly. Each of the tracks took place in simulation in advance of the conference, with results presented at the conference.

This competition had a more industrial focus, looking to solve practical near-term problems. Information on each of the tracks is available on the competition website.

The Stacking track in particular seemed to have been an effective way to do practical research. The sponsors of this track, Vanderlande, mentioned that they took part in order to raise awareness of their problem within the research community. As a logistics firm, efficient packing is a key component of their work.

The bin stacking competition had several tasks that challenged participants to stack 3D objects efficiently within a box, with different lookahead scenarios (i.e. with/without planning ahead knowing what is coming) and for different ranges of item geometries (cuboid parcels vs arbitrary shapes). As well as cash prizes, the best parcel loading solution was selected to jointly explore product development alongside Vanderlande. We look forward to the outcome of the collaboration between Vanderlande and the winner of this track, Viroteq, a company that specialises in ML for logistics automation.

Other Robotics Competitions

We list ongoing robotics competitions on our competition listing page.

Other regular events that host interesting robotics competitions:

- IROS; the International Conference on Intelligent Robots and Systems. The 2023 edition is in Detroit, October 1-5.

- RoboCup; the 2023 edition is in Bordeaux, July 4-10.

- NeurIPS, a machine learning conference, also tends to have a few robotics competitions in its competition track. The 2023 edition is in New Orleans, December 10-16.

About ML Contests

For over three years now, ML Contests has provided a competition directory and shared insights on trends in the competitive machine learning and robotics space. To receive occasional updates with key insights like this conference summary, subscribe to our mailing list. You can also follow us on Twitter, or join our Discord community.